The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

Three key findings:

1. The news source rating tool worked as intended. Perceived accuracy increased for news headlines with a green source cue and decreased for headlines with a red source cue. Participants also indicated they were less likely to read, like or share news headlines with a red source cue. The source rating tool was particularly effective for participants who correctly recalled that experienced journalists devised the ratings, compared with those who did not recall that information.

2. The source rating tool was effective across the political spectrum. The perceived accuracy of news articles with a red source cue decreased similarly among Republicans and Democrats, with the sharpest decline occurring when the headlines had a clear political orientation that matched the users’ political beliefs.

3. The source rating tool did not produce known, unintended consequences associated with previous efforts to combat online misinformation. Our experiment did not produce evidence of an “implied truth effect,” an increase in perceived accuracy for false stories without a source rating when other false stories have a source rating, or a “backfire effect,” a strengthening of one’s false beliefs following a factual correction.

A “red source cue,” however, was not enough to stop many survey respondents from saying they would share a false story:

Slightly less than half of the participants (44 percent) in the control group said they would share at least one of the false stories. This proportion is significantly higher than in previous survey results, which showed that 23 percent of U.S. adults said that they have shared a made-up news story (either knowingly or not). Our results may suggest that the behavior of sharing misinformation is more widespread than previously reported….

The proportion of respondents who indicated they would share misinformation dropped by 10 percentage points when comparing the control group with those who received a red source cue — but a substantial minority (34 percent) still said they would share at least one false story with a red source cue.

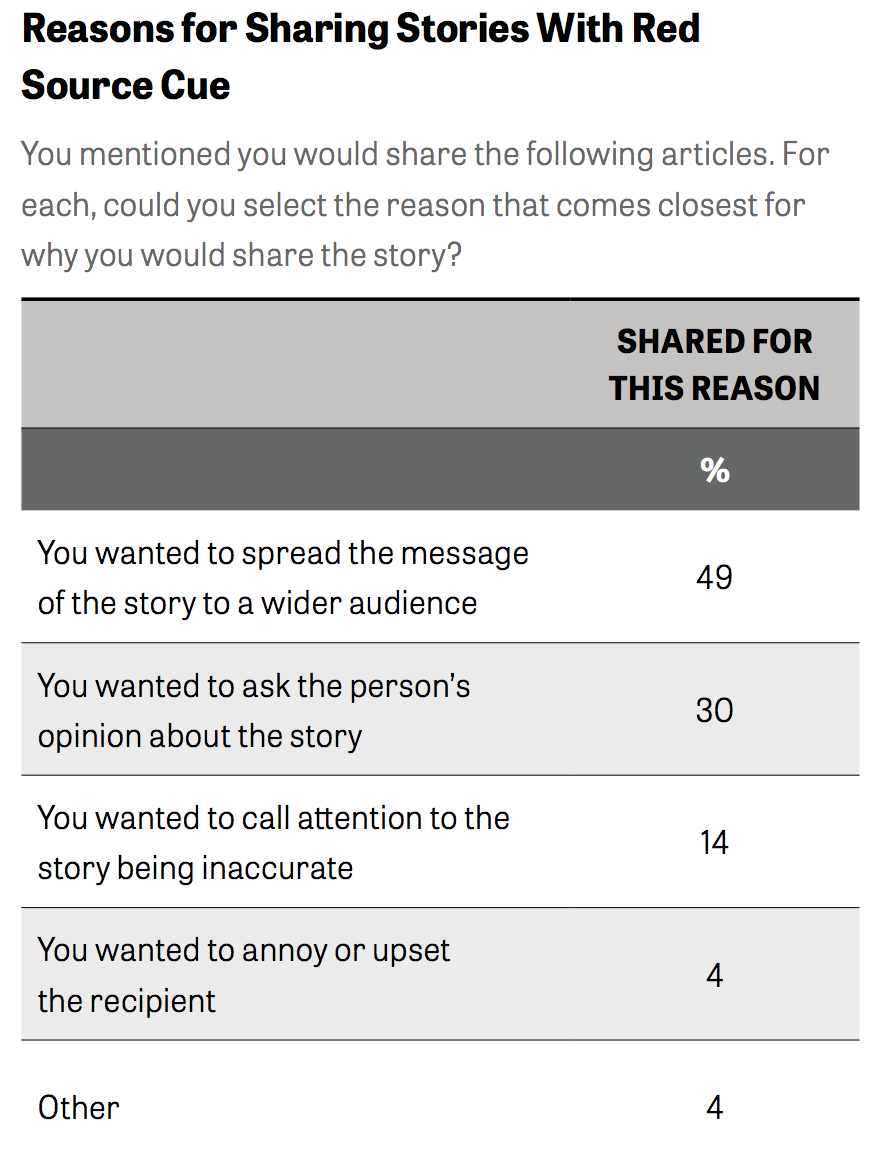

Here are the reasons they gave for sharing the false stories:

I find the “wanted to ask the person’s opinion about the story” reason somewhat troubling, since it suggests that these people still think of the “red source cue” as just another opinion: as if truth is on a spectrum here, and the outside rating is one viewpoint, but whatever person you share it with on Facebook might have a different, equally valid viewpoint. That would seem to undermine the usefulness of a system like this entirely…

“Each new election is a test.” The Washington Post’s Elizabeth Dwoskin took a peek at Facebook’s fact-checking efforts ahead of the Mexican election. (Another big problem in Mexico around the election: Fake news on WhatsApp.)

In this case, the most problematic posts are not coming from outside the country but from within it. “The hardest part is where to draw the line between a legitimate political campaign and domestic information operations,” Facebook security executive Guy Rosen said. “It’s a balance we need to figure out how to strike.”

In a talk for security experts in May, Facebook security chief Alex Stamos called such domestic disinformation operations the “biggest growth category” for election-related threats that the company is confronting. These groups, he said, are copying Russian operatives’ tactics to “manipulate their own political sphere, often for the benefit of the ruling party.”

This area is also the trickiest: While most democracies bar foreign governments from meddling in elections, Facebook sees internal operators as much harder to crack down on because of the free-speech issues involved.

Also:

Unlike in the United States, where Facebook’s AI systems automatically route most stories to fact-checking organizations, Facebook relies on ordinary people in Mexico to spot questionable posts. Many people flag stories as false simply because they disagree with them, executives said.

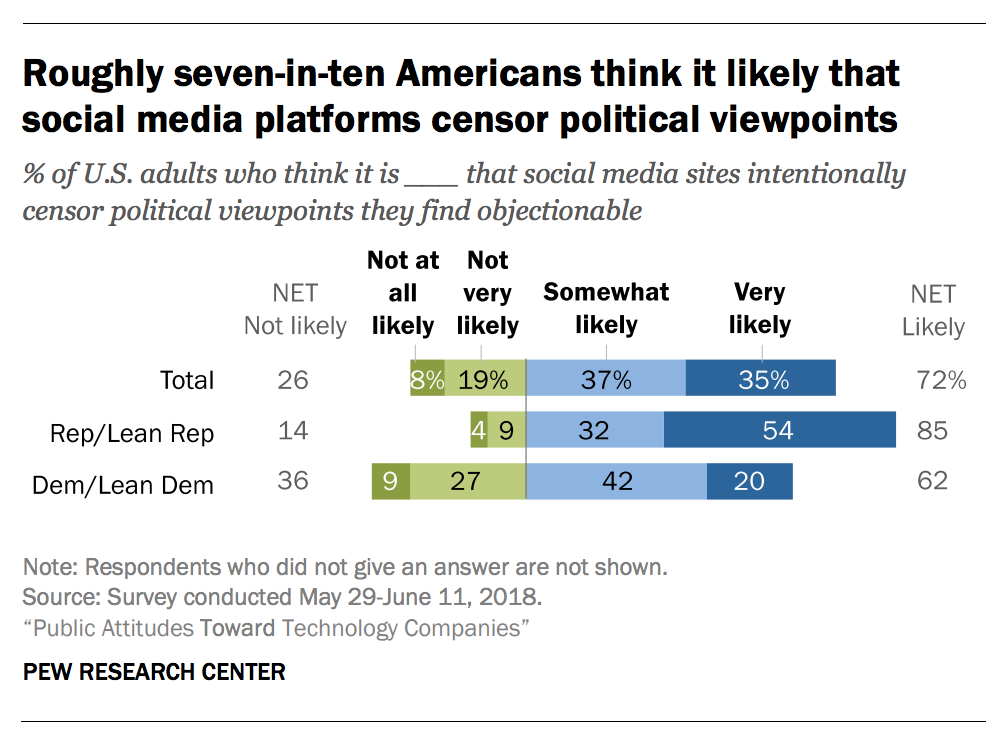

A large majority of Republicans believe that social media platforms are censoring some political views. Pew research out Thursday finds that:

[fully] 85 percent of Republicans and Republican-leaning independents think it likely that social media sites intentionally censor political viewpoints, with 54 percent saying this is very likely. And a majority of Republicans (64 percent) think major technology companies as a whole support the views of liberals over conservatives.

(Pew notes: “In interpreting these findings, it is important to note that the public does not find it inherently objectionable for online platforms to regulate certain types of speech.”)

Facebook and Twitter have held dinners and meetings with conservative leaders who criticize the platforms for being biased, The Washington Post’s Tony Romm reported this week. At Twitter CEO Jack Dorsey’s June 19 dinner with conservative politicians and leaders, for instance,

the Twitter executive heard an earful from conservatives gathered at the table, who scoffed at the fact that Dorsey runs a platform that’s supposed to be neutral even though he’s tweeted about issues like immigration, gay rights and national politics. They also told Dorsey that the tech industry’s efforts to improve diversity — after years of criticism for maintaining a largely white, male workforce — should focus on hiring engineers with more diverse political viewpoints as well, according to those who dined with him in D.C.

Also:

Some of the conservative media commentators and political pundits specifically urged Dorsey during their meetings to take a closer look at Moments, a feature that tracks trending national stories and issues. At both the New York and D.C. dinners, conservative participants said they felt that Twitter Moments often paints right-leaning people and issues in a negative light, or excludes them entirely, according to four individuals who spoke on the condition of anonymity. Dorsey said he would look into the issue, the people said, but did not announce specific changes.

What I find fascinating about the several meet-ups social media has had w/ conservatives, is the feeling that there’s an inherent need for these platforms to be unbiased and run by unbiased folks … as though they’re a public utility https://t.co/vuDbiGJJWT

— Hadas Gold (@Hadas_Gold) June 27, 2018