The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

“Who should be responsible for censoring ‘unwanted’ conversation, anyway? Governments? Users? Google?” Breitbart — yep, leading the column with a Breitbart story! — got leaked a Google presentation, “The Good Censor,” that shows how Google is grappling with the question of whether it’s possible to “have an open and inclusive internet while simultaneously limiting political oppression and despotism, hate, violence and harassment.”

The report is insightful and interesting, and I don’t really see why it should have been leaked instead of Google simply releasing it publicly — it’s good to see that at least some in the company are thinking about these issues in nuanced ways.

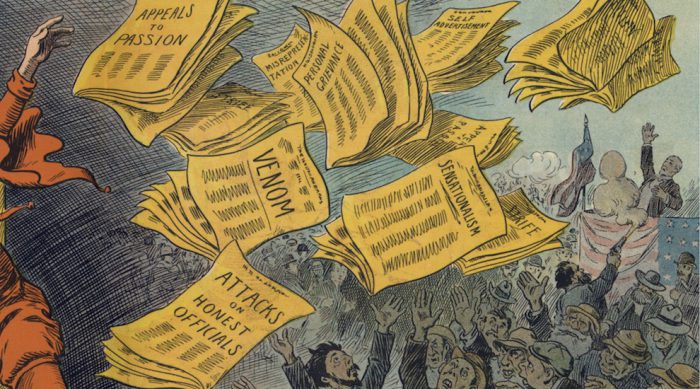

“This free speech ideal was instilled in the DNA of the Silicon Valley startups that now control the majority of our online conversations,” the Google report notes. But “recent global events have undermined this utopian narrative” of the internet as a place for free and uncensored discussion:

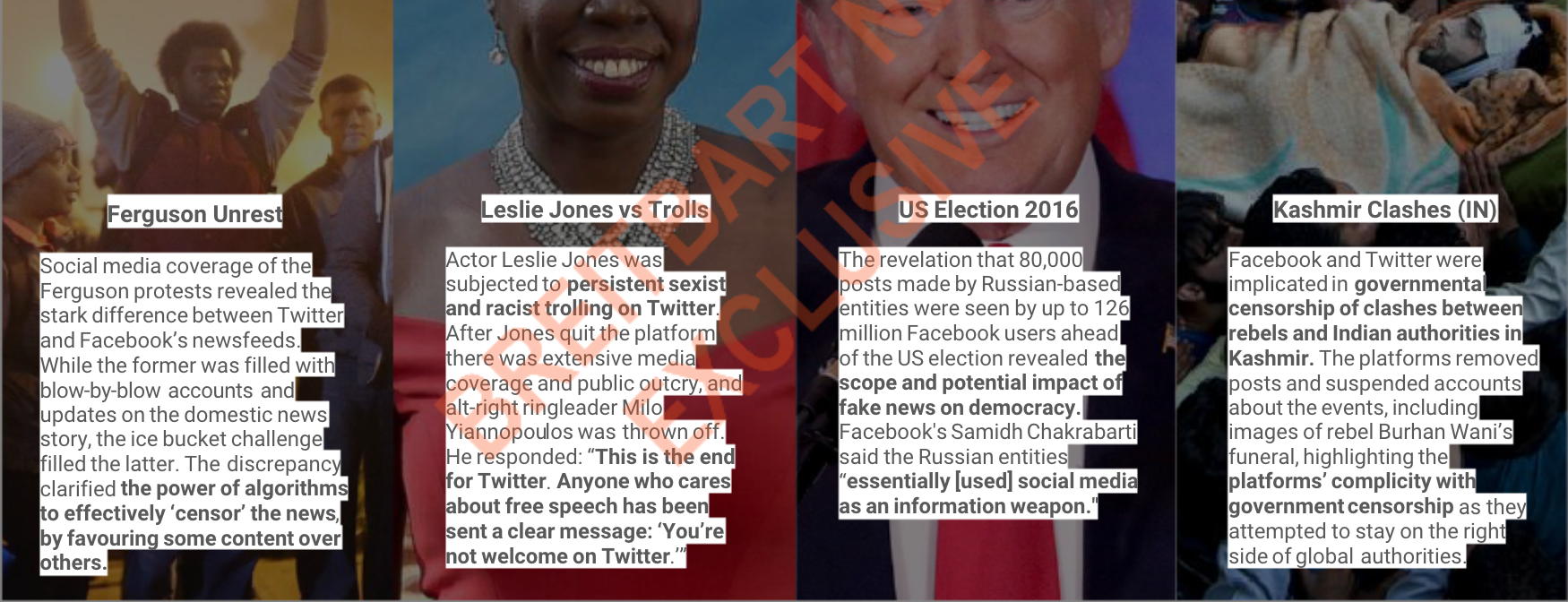

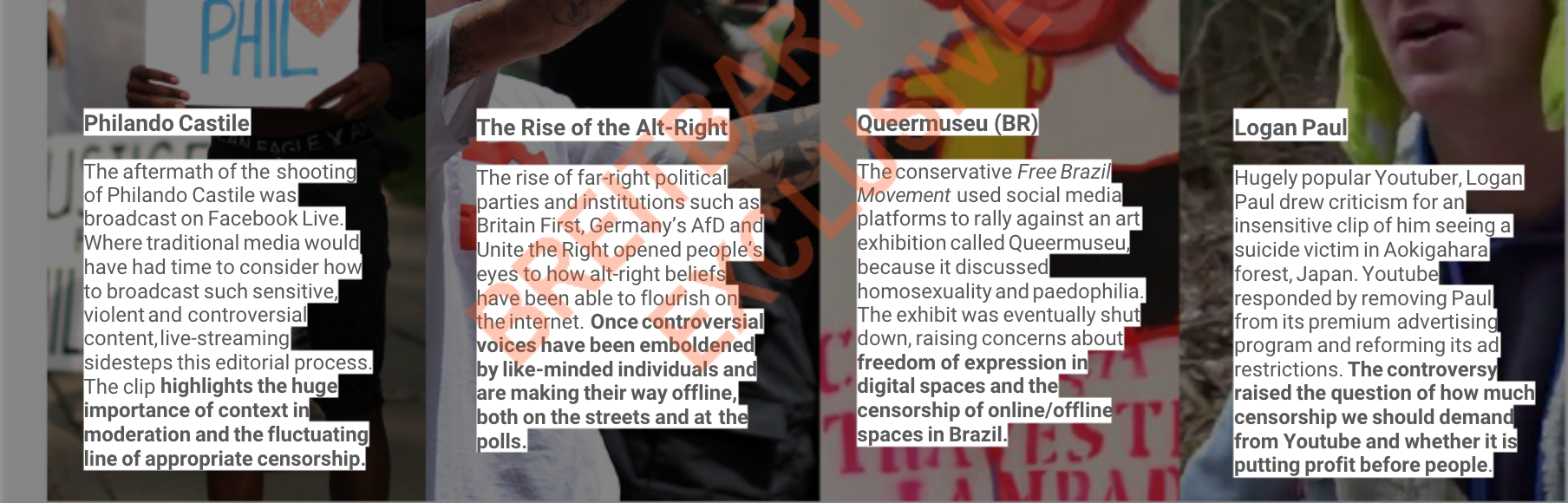

“Users, governments, and tech firms are all behaving badly,” the report says — and offers examples of each of those groups’ bad behavior. Here are some of the examples that Google gives of tech firms acting badly and “mismanaging the issues”:

— Incubating fake news. Untrustworthy sources and misinformation have thrived on tech platforms. Dubious distributors have capitalized on a lack of sense-checking and algorithms that reward sensationalist content. And rational debate is damaged when authoritative voices and ‘have a go’ commentators receive equal weighting.

— Ineffective automation. With 400 hours of video uploaded to YouTube and 340,000 tweets sent every minute, it isn’t surprising that platforms outsource moderation duties to AI and automation. But even the most sophisticated tech can censor legitimate and legal videos in error, while erroneous content can elude the safeguards.

— Commercialized conversation. Shares, likes and clickbait headlines — monetized online conversations aren’t great news for rational debate. And when tech firms have an eye on their shareholders as well as their free-speech and censorship values, the priorities can get a little muddled.

— Inconsistent interventions. Human error by content moderators combined with AI that falls short when faced with complex context mean that digital spaces are rife with users’ frustrations about removed posts and suspended accounts, especially when it seems like plenty of bad behavior is left untouched.

— Lack of transparency. The tech platforms’ algorithms are complicated, obscure, and constantly changing. In lieu of satisfactory explanations for why bad things are happening, people assume the worst — whether that’s that Facebook has a liberal bias or that YouTube doesn’t care about weeding out bad content.

— Underplaying the issues. When faced with a scandal, the tech platforms have often underplayed the scope of the problem until facts prove otherwise. They’ve frustrated users by not giving their complaints and fears the respect and attention they’ve deserved, creating a picture of ill-informed arrogance.

— Slow corrections. From a user’s perspective, the tech platforms are quick to censor and slow to reinstate content that was wrongfully taken down. While the platforms can suspend an account in an instant, users often endure a slow and laborious appeals process, compounding the feeling of unfair censorship.

— Global inconsistency. In a global world, the platforms’ status as bastions of free speech is hugely undermined by their willingness to bend to requirements of foreign repressive governments. When platforms compromise their public-facing values in order to maintain a global footprint, it can make them look bad elsewhere.

— Reactionary tactics. When a problem emerges, the tech platforms seem to take their time and wait to see if it’s going to blow over before wading in with a solution or correction. The lag gives users and governments plenty of time to point fingers, gather supporters, and get angrier.

“Warning! This story describes a misrepresentation of women.” NewsMavens, a news source curated entirely by women at European news organizations, has launched #FemFacts, a fact-checking initiative “dedicated to tracking and debunking damaging misrepresentations of women in European news media.” “We’re not just going to track false news, but also try to have a more nuanced approach to finding stuff like manipulated presentation of facts: misinformation that’s not false, but skewed,” Tijana Cvjetićanin told Poynter’s Daniel Funke. Their first fact-checks are here.

Will California’s media literacy law for schools backfire? At the end of September, California passed a bill (SB 830) that “encourages” media literacy education in public schools by requiring “the state Department of Education’s website to list resources and instructional materials on media literacy, including professional development programs for teachers.” But Sam Wineburg, who’s done some great research on how bad people (even historians with Ph.D.s) are at doing basic online fact-checking, worries that “the solutions [California legislators] propose are more likely to exacerbate the problem than solve it,” since the links on the site are likely to go to checklists and other outdated approaches. Wineburg wrote recently in The Washington Post:

In the short term, let’s teach web credibility based on what experts actually do. We should teach a few choice strategies and make students practice them until they become habitual — in the words of search specialist Mike Caulfield, the equivalent of looking in the rearview mirror when switching lanes on the highway. Such strategies won’t eliminate every error, but they’ll surely take a bite out of the costliest ones.

And while we’re at it, let’s not forget that shoehorning an hour of media literacy between trigonometry and lunch is only a stopgap measure. The whole curriculum needs an overhaul: How should we teach history when Holocaust deniers flood cyberspace with concocted ‘evidence’; or science, when anti-vaxxers maintain a ‘proven’ link between autism and measles shots; or math, when statistics are routinely manipulated and demonstrable effects disappear with graphs that double as optical illusions?

Also check out this interview with Caulfield, who runs the Digital Polarization Initiative at Washington State University, Vancouver, with the goal of teaching web literacy to undergrads. One part I liked:

I think we overestimate the echo-chamber, actually. But the way I think about tribalism in general is it’s a way to filter information. It’s a way of dealing with information overload. To attack it, you need better and relatively effort-free alternatives to quickly sort information. And so we find in our classes that many students become less tribal in how they evaluate, because they have new filters. They go from ‘I would trust this video because Democrats did put coal workers out of work’ to ‘This video has some good statistics, but on investigation turns out to be put out by the coal industry, I’d rather see a better source.’

As far as correcting people, think of the audience for the correction being other people who look at the post who might be a bit more persuadable. Acknowledge that the feelings that the person may be expressing with the post are valid, but that the particular thing shared is not a good selection. Let people know that it’s OK to express their feelings with these things, and point them to sources that help them do that while maintaining the integrity of the information environment.