One by one, people plopped onto the red couch and told us what they thought of live fact-checking.

They watched video clips from two State of the Union addresses that we had specially modified. When the presidents made factual claims, fact checks popped up on the screen.

Our October experiment in Seattle was groundbreaking and revealing. People have long mused about live fact-checking on television (and there have been experiments with non-televised ways of performing live fact checks), but this marked the first in-depth study. It revealed our product could have tremendous appeal — but we need to explain it better to our users.

The test was part of our Duke Tech & Check Cooperative and was conducted by Blink, a company that specializes in user experience consulting. Blink conducted hour-long sessions with 15 participants, a mix of Republicans, Democrats and independents. Most sessions were in a “simulated living room” in Seattle that was home to a bright red couch. Some people took part remotely through video links.

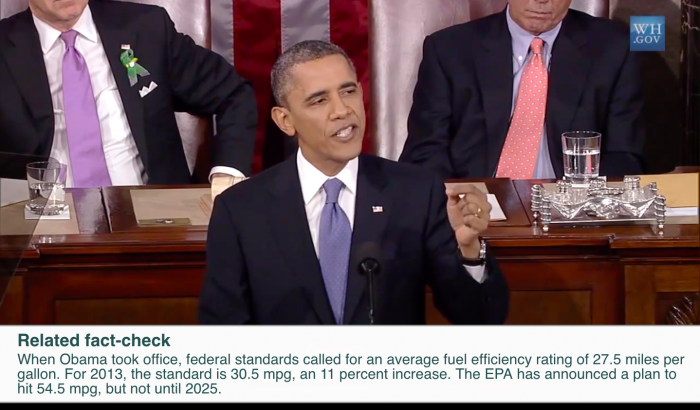

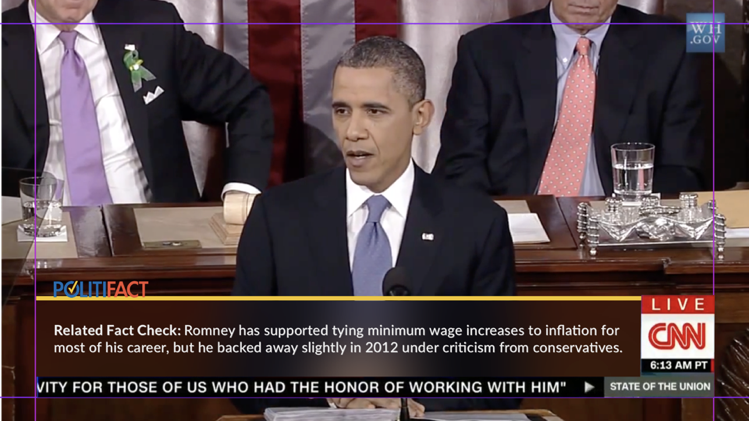

The participants saw four-minute excerpts of speeches by Barack Obama and Donald Trump that had been retrofitted with actual fact checks. We could manipulate the displays to show the checks in different formats — with a Truth-O-Meter rating from PolitiFact, with rating words such as “true” or “false,” or with just a “summary text” of facts that mostly left it to viewers to figure out if the claim was accurate.

Our most important finding: People love this new form of TV fact-checking. Participants were unanimous that they would like a feature like this during political events such as debates and speeches. They said it would hold politicians more accountable for what they say. Some of the participants said they liked the service so much that they would choose a network that offered fact-checks over one that did not.

The participants also gave us some valuable feedback about the displays. They said they wanted the fact-checks displayed at the bottom of the screen rather than in one of the corners, and that they should appear one to two seconds following a statement.

We also found plenty of confusion. At least a few people wrongly assumed that when a politician made a factual claim but a fact check did not appear, that meant the statement had been rated true. It actually just meant it had not been checked.

Also, our generic presentation of the fact checks left many viewers wondering who wrote them. The TV network? Someone else?

One participant told Blink she likes to “fact-check the fact check.” But to do that, she needs to know what organization is providing the information.

“Because there’s nothing there that says who it’s coming from, my interpretation is that it’s coming from the same people who are broadcasting this.”

The experiment was done under ideal conditions.

We used real fact checks that were published hours or even days after the speeches, and we created summaries that popped up as if they had been instantly available at the moment the statements were uttered. Those conditions will rarely occur with the products we’re now developing, which link live speech to previously published fact checks.

The participants recruited for the study, all of whom expressed respect for journalistic objectivity, generally agreed on several aspects of the experiment, including our use of the label “Related Fact Check,” which they felt was intuitive. But we found no consensus on whether ratings, such as the words true and false or PolitiFact’s Truth-O-Meter, were preferable to the just-the-facts option, which did not provide an assessment of whether the statement was accurate.

People were split over whether they preferred the just-the-facts option (eight people) or rating words and devices (seven). This finding is also complicated because we modified the text in the just-the-facts option after the first day of testing in response to comments from the early viewers.

The summary text had been a little skimpy the first day, but we beefed it up in subsequent days in an option we called “fact-heavy.” One example: “During the period Obama chose — from January 2010 to January 2013 — manufacturing jobs rose by 490,000. Those gains replaced less than 10 percent of the jobs lost during the decade of decline.”

The 8-7 split raises a fundamental question about what people want from our new service: Do they want fact-checking? Or just facts?

In other words, do they want us to summarize the accuracy of a statement by giving a rating? Or do they just want the raw materials so they can do that work themselves?

I should note that this is a personal issue for me because I’m the inventor of the Truth-O-Meter and have always envisioned that automated fact-checking would feature my beloved meter. But last summer I acknowledged my conflict and said I would be open to automation without meters.

A few participants said they were concerned about bias behind the fact-checking. As one participant put it, “If I’m going to tune into something that is going to have a fact-checking supplement, I want it to be free from as much bias as possible.”

Those concerns are real. Some might be addressed through transparency (e.g., “This fact-checking provided by The Washington Post”), but other viewers simply may not trust anyone and just want the raw facts.

One option here could be different products: summaries with ratings for people who want the benefit of a conclusion; raw facts for the do-it-yourselfers.

We learned a lot from the red couch experiments. The strong interest in our product is a wonderful sign.

We also came away with valuable suggestions about the location of pop-up messages. Viewers want them at the bottom of the screen where they’re accustomed to getting that kind of information, not in the corners.

Our biggest takeaway was about the need to remedy the confusion that many viewers had about the source of the information and about when a fact check will appear. We need to tell viewers what they’re getting and what they’re not.

Blink mocked up how a CNN screen might look with the logo of a fact-checker to make clear that the content was not provided by CNN. That kind of subtle design could be a simple way of addressing the problem.

The viewers’ confusion about statements when no fact check appears is understandable because we didn’t provide viewers with any guidance. We’ll need to devise a simple way to educate them about when they should expect a pop-up.

We also need to explore the interesting divide about the just-the-facts approach vs. ratings and rating devices such as a Truth-O-Meter. Some people like ratings, while others clearly don’t. Can we offer a single product that satisfies both audiences? Or should we offer some kind of toggle switch that allows people to choose ratings or just facts?

There’s a joke in academia that studies always conclude that more research is needed. The phrase has its own Wikipedia page and has spawned T-shirts and, of course, research.

But in our case, we still have important things to explore. More research is needed.

Bill Adair is creator of PolitiFact and the Knight Professor for Journalism and Public Policy at Duke University.