The Atlantic is launching a new skill for Amazon Echo and Google Home: A “single, illuminating idea” every weekday. From the release:

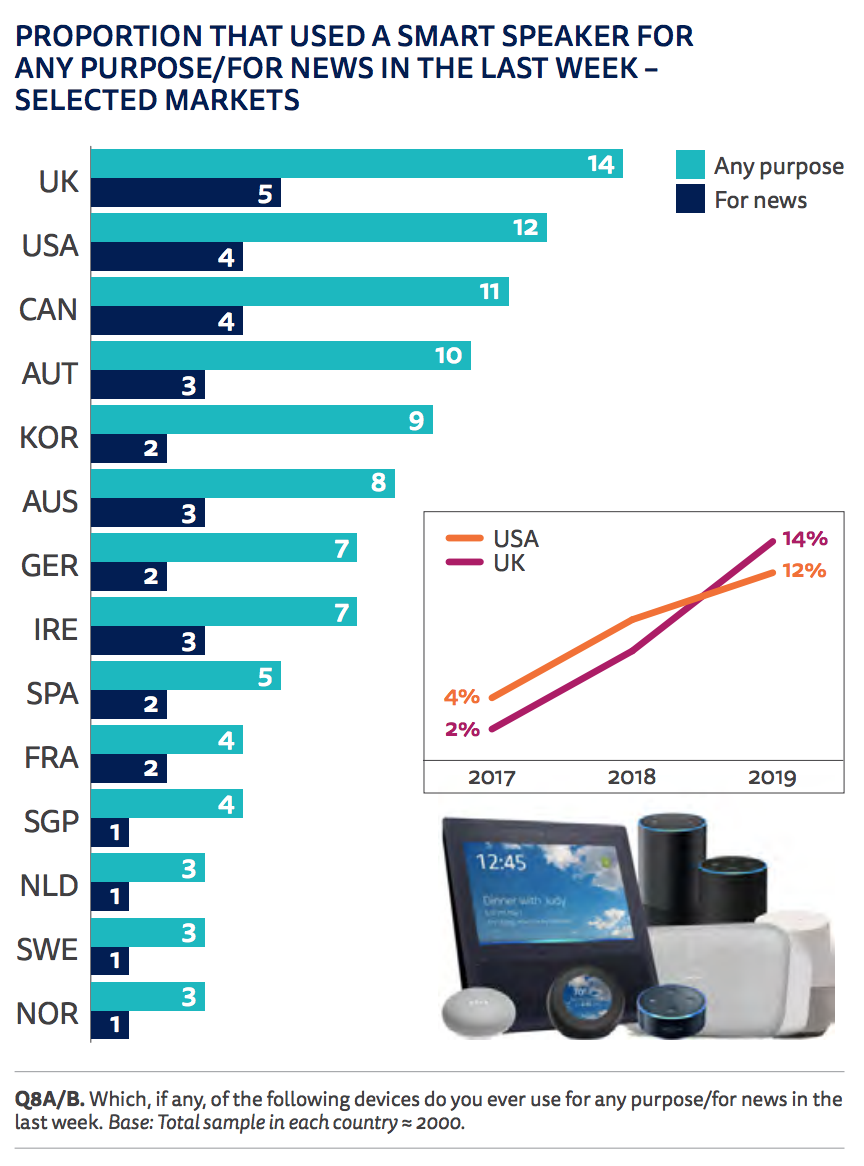

The Atlantic’s briefing joins a number of other offerings from publishers. But while ownership of the devices is increasing — an estimated 65 million U.S. adults, around 23 percent of the population over 12, own one; 12 percent of U.S. adults said they used one in the past week, per the new Reuters Digital News Report, and 14 percent of U.K. adults — the percentage of people who use them for news is quite a bit smaller. People still mostly use them for music and the weather.Every weekday, when people ask their smart speakers to play The Atlantic’s Daily Idea, they’ll hear a condensed, one-to two-minute read of an Atlantic story, be it “An Artificial Intelligence Developed Its Own Non-Human Language” or “The Case for Locking Up Your Smartphone.” The skill will include reporting from across The Atlantic’s science, tech, health, family, and education sections, as well as the magazine’s archives, representing the work of dozens of writers.