The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

“Something that Facebook has never done: ignoring the likes and dislikes of its users.” I really liked this recent BuzzFeed essay, “Donald Trump And America’s National Nervous Breakdown: Unlocking your phone these days is a nightmare,” in which Katherine Miller writes: “There’s so much discordant noise that just making out each individual thing and tracking its journey through the news cycle requires enormous effort. It’s tough to get your bearings.”

I thought about this piece when I was trying to decide how to cover Facebook’s zillion tiny ongoing announcements and initiatives to track fake news: It’s hard to keep them all straight, to remember which are fully new and which are just incremental, and to recognize which are actual shifts in the company’s previously stated positions.

Farhad Manjoo tracked some of those shifts in this week’s New York Times Magazine cover story, “Can Facebook Fix Its Own Worst Bug?” for which he interviewed Mark Zuckerberg multiple times. From the piece:

But the solution to the broader misinformation dilemma — the pervasive climate of rumor, propaganda and conspiracy theories that Facebook has inadvertently incubated — may require something that Facebook has never done: ignoring the likes and dislikes of its users. Facebook believes the pope-endorses-Trump type of made-up news stories are only a tiny minority of pieces that appear in News Feed; they account for a fraction of 1 percent of the posts, according to [Adam Mosseri, VP in charge of News Feed]. The question the company faces now is whether the misinformation problem resembles clickbait at all, and whether its solutions will align as neatly with Facebook’s worldview. Facebook’s entire project, when it comes to news, rests on the assumption that people’s individual preferences ultimately coincide with the public good, and that if it doesn’t appear that way at first, you’re not delving deeply enough into the data. By contrast, decades of social-science research shows that most of us simply prefer stuff that feels true to our worldview even if it isn’t true at all and that the mining of all those preference signals is likely to lead us deeper into bubbles rather than out of them.

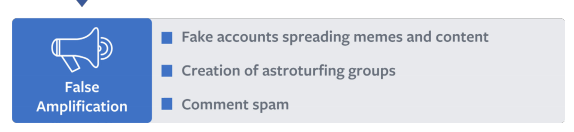

On Thursday, Facebook released a white paper outlining some new ways in which the company has “[expanded] our security focus from traditional abusive behavior, such as account hacking, malware, spam, and financial scams, to include more subtle and insidious forms of misuse, including attempts to manipulate civic discourse and deceive people.” One thing the paper focuses on is “false amplification.”

“False amplifier accounts manifest differently around the globe and within regions,” the authors write. But “in the long term, these inauthentic networks and accounts may drown out valid stories and even deter people from engaging at all.” Among other things, this may include “fake account creation, sometimes en masse”; “coordinated ‘likes’ or reactions”; and

Creation of Groups or Pages with the specific intent to spread sensationalistic or heavily biased news or headlines, often distorting facts to fit a narrative. Sometimes these Pages include legitimate and unrelated content, ostensibly to deflect from their real purpose.

This stuff is coordinated by real people, Facebook says — not bots.

We have observed that most false amplification in the context of information operations is not driven by automated processes, but by coordinated people who are dedicated to operating inauthentic accounts. We have observed many actions by fake account operators that could only be performed by people with language skills and a basic knowledge of the political situation in the target countries, suggesting a higher level of coordination and forethought. Some of the lower-skilled actors may even provide content guidance and outlines to their false amplifiers, which can give the impression of automation.

The authors say that the reach of false amplification efforts during the 2016 U.S. election was “statistically very small compared to overall engagement on political issues.” Facebook also says that it has taken action against more than 30,000 fake accounts in France. (There’s more about Facebook and the French election here.)

“People were really shellshocked.” Google’s “Project Owl”, announced this week, aims to get offensive and/or false content out of AutoComplete and Featured Snippets. Here is Search Engine Land’s Danny Sullivan:

‘Problematic searches’ is a term I’ve been giving to situations where Google is coping with the consequences of the ‘post-truth’ world. People are increasingly producing content that reaffirms a particular world view or opinion regardless of actual facts. In addition, people are searching in enough volume for rumors, urban myths, slurs or derogatory topics that they’re influencing the search suggestions that Google offers in offensive and possibly dangerous ways…they pose an entirely new quality problem for Google.

Problematic searches make up only a fraction of Google’s overall search stream, but bring the company a huge amount of terrible press. “It feels like a small problem,” Google Fellow Pandu Nayak told Sullivan. “People [at Google] were really shellshocked [by the bad press]…It was a significant problem, and it’s one that we had, I guess, not appreciated before.”

Also, see Sullivan’s big piece from earlier this month: “A deep look at Google’s biggest-ever search quality crisis.” (Meanwhile, Klint Finley at Wired wonders whether Google should really be trying to provide “one true answer” in the first place.)

While we’re talking about “significant” levels of things… A Harvard Kennedy School Institute of Politics poll of 2,654 Americans ages 18–29 found that, on average, they believe that a whopping 48.5 percent of the news in their Facebook feed is fake.

And “Republicans believe there is more (56%) ‘fake news’ in their feed, compared to Democrats (42%) and Independents (51%).”

This is kind of nuts. Back to Farhad Manjoo’s piece at the top of this post: Facebook believes that made-up news stories account for “a fraction of 1 percent” of the posts in News Feed. But back to BuzzFeed’s Miller: “Trying to find your way under the crush — to determine the truth amid the complexities of protocols, regulations, legislation, ideology, anonymous sources, conflicting reports, denials, public statements, his tweets — it’s too much. We can’t live like that!”