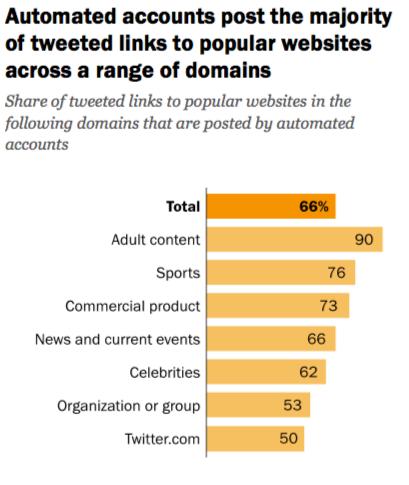

Certain types of websites were much more popular with the bots than others: Around 90 percent of the tweeted links to “adult content” sites came from what were likely bot accounts in the sample of tweets Pew looked at.

Some 76 percent of tweeted links to popular sports sites came from bots (though don’t take these percentages as some sort of proxy for how many real humans actually saw or engaged with these bot-generated tweets).

Pew’s report analyzed a sample of 1.2 million tweets containing links that went out between July 27 and September 11, 2017, categorizing 2,315 of the most-linked-to English-language websites by topic. To help determine whether the Twitter account tweeting out a link was likely a bot, Pew relied on Botometer, an automated posting detection tool from researchers at the University of Southern California and the Center for Complex Networks and Systems Research at Indiana University.

Researchers also found that a few hundred extremely active bots dominated the share of tweets linking out to news sites:

This analysis finds that the 500 most-active suspected bot accounts are responsible for 22 percent of tweeted links to popular news and current events sites over the period in which this study was conducted. By comparison, the 500 most-active human users are responsible for a much smaller share (an estimated 6 percent) of tweeted links to these outlets.

But these automated posters on Twitter did not appear to be primarily pushing one political leaning or another:

Suspected bots share roughly 41 percent of links to political sites shared primarily by liberals and 44 percent of links to political sites shared primarily by conservatives — a difference that is not statistically significant. By contrast, suspected bots share 57 percent to 66 percent of links from news and current events sites shared primarily by an ideologically mixed or centrist human audience.

Bots can pollute discourse online around divisive issues. Bots can also be useful. And bots are common. In an investigation into the shady practices of buying popularity on social networks like Twitter, The New York Times identified at least 3.5 million of them. Researchers suggest that as many as 15 percent of all Twitter accounts appear to be behaving like bots. Pew’s analysis wasn’t about fact-checking tweets or rooting out foreign government interference, so “we can’t say from this study whether the content shared by automated accounts is truthful information or not, or the extent to which users interact with content shared by suspected bots,” Stefan Wojcik, the study’s lead author, said.

Twitter on its end has been cracking down a bit more on bad and spammy activity. A purge in February set off alarm bells from many loud voices in the pro-Trump and far-right crowd. In its study of the prevalence of automated posting in the Twittersphere, Pew also found that 7,226 of the accounts included in its 2017 analysis were later suspended by Twitter, 5,592 of which were bots (accounts with automated posting were 4.6 times more likely to be suspended than accounts from real people).

Most bots are not openly identified as such, and Botometer isn’t 100 percent accurate, so for the purposes of Pew’s research, the bots identified in its analysis are all “suspected bots.”

“I want to lead this off by saying that any classification system of whether something is likely bot or not is inherently going to contain some level of uncertainty and is going to produce false positives as well as false negatives,” Aaron Smith, associate director of research, told me in response to some individual criticisms of the methodology raised on Twitter after the report was published on Monday. “I’m a survey research guy by training and an analogy I sometimes use to talk about this is, if you pick any individual person out of the population, you may find someone who has views that are wildly divergent with the bulk of public opinion, but if you collect this bulk of responses using known and tested methodologies, you find something that largely conforms with observed reality, even if you have outliers and extreme cases.”

For its study, Pew conducted several separate tests in conjunction with Botometer scoring to crosscheck the bot classifications it had made, including manual classification of accounts. It also looked at findings with tweets from verified accounts — such as @realdonaldtrump — removed, and found the results didn’t significantly change.

Thanks for your comment. We chose the cut point that maximized accuracy of the Botometer score, based on validation tests using human coders. Also, when we remove 'verified' Twitter accounts, like those you flagged, results are still very similar: https://t.co/zlWp018azR

— stefan j. wojcik (@stefanjwojcik) April 9, 2018
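To make the "cut point" idea in Wojcik's reply concrete, here is a minimal sketch of how a threshold that maximizes accuracy against human-coded labels could be chosen. It is not Pew's code and does not call the Botometer API. The function name `best_cut_point` and the scores and labels are made up for illustration, and it assumes scores run from 0 to 1 with higher meaning more bot-like.

```python
from typing import Sequence, Tuple


def best_cut_point(scores: Sequence[float], is_bot: Sequence[bool]) -> Tuple[float, float]:
    """Return the (threshold, accuracy) pair that best matches human labels.

    An account is classified as a suspected bot when its score is at or
    above the threshold. Ties keep the lowest threshold found.
    """
    if not scores or len(scores) != len(is_bot):
        raise ValueError("scores and is_bot must be non-empty and the same length")

    # Try every observed score as a threshold, plus infinity,
    # which corresponds to classifying no account as a bot.
    candidates = sorted(set(scores)) + [float("inf")]

    best_threshold, best_accuracy = candidates[0], -1.0
    for threshold in candidates:
        # Count accounts where the thresholded score agrees with the human coder.
        correct = sum((s >= threshold) == label for s, label in zip(scores, is_bot))
        accuracy = correct / len(scores)
        if accuracy > best_accuracy:
            best_threshold, best_accuracy = threshold, accuracy
    return best_threshold, best_accuracy


# Hypothetical validation set: bot-likeness scores and human coders' judgments.
example_scores = [0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.80, 0.90]
example_labels = [False, False, False, True, False, True, True, True]

threshold, accuracy = best_cut_point(example_scores, example_labels)
print(f"cut point = {threshold}, accuracy = {accuracy}")
```

On this toy data, no threshold classifies every account correctly, which is the point Smith makes above: any cut point trades some false positives against some false negatives.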

Here’s a Pew Research video also explaining how researchers approached finding Twitter bots. You can read the full analysis and methodologies here.

