Editor’s note: As part of its effort to explore the root causes of the current crisis in trust in the media, the Knight Foundation is commissioning a continuing series of white papers from academics and experts. Here’s what they’ve learned so far. (Disclosure: Knight has also been a funder of Nieman Lab.)

Institutional trust is down across the board in American society (with a few notable exceptions, such as the military). But the decline in trust in the media is particularly troubling: it plummeted from 72 percent in 1976 to 32 percent in 2017. There are many reasons for this decline, writes Yuval Levin, but one of the problems is that the rise of social media has pushed journalists to focus on developing personal brands:

“This makes it difficult to distinguish the work of individuals from the work of institutions, and increasingly turns journalistic institutions into platforms for the personal brands of individual reporters. When the journalists’ work appears indistinguishable from grabbing a megaphone — they become harder to trust. They aren’t really asking for trust.”

Humans are biologically wired to respond positively to information that supports their own beliefs and negatively to information that contradicts them, writes Peter Wehner. He also points out that beliefs are often tied up with personal identity, and that changing beliefs may put people at risk of rejection from their communities.

As people increasingly rely on social media platforms for information, they are at the mercy of opaque algorithms they don’t control, write Samantha Bradshaw and Philip Howard. These algorithms are optimized to maximize advertising revenue for the platforms. Because people tend to share information that provokes strong emotions and confirms what they already believe, “The speed and scale at which content ‘goes viral’ grows exponentially, regardless of whether or not the information it contains is true.”

The conventional wisdom these days is that we’re all trapped in filter bubbles or echo chambers, listening only to people like ourselves. But, write Andrew Guess, Benjamin Lyons, Brendan Nyhan and Jason Reifler, the reality is more nuanced. While people tend to self-report a filtered media diet, other data show that many people barely engage with political information at all, choosing entertainment over news instead. That doesn’t mean there is no problem. “[P]olarized media consumption is much more common among an important segment of the public — the most politically active, knowledgeable, and engaged. This group is disproportionately visible online and in public life.”

People may be predisposed to hold on to beliefs that are agreeable to them, but they are also more likely to believe a correction if it comes from a source they would expect to promote the opposing view. However, a simple correction alone rarely works. And even when people accept corrections, other studies show that a taint persists: a “belief echo,” in which the false belief continues to affect attitudes.

There’s no one-size-fits-all way to communicate complicated information, write Erika Franklin Fowler and Natalie Jomini Stroud, but science can help. “Different goals require different types of information. If we know people don’t have the time or motivation to pay attention to in-depth information on all issues, then we might encourage the use of endorsements or other cues from trusted sources. If we seek to increase participation, it is helpful to encourage citizens to join groups and to consume like-minded information, but if we want to encourage empathy or deliberation, we need more balanced information that compassionately represents others. Sometimes people learn best through experiences, or getting issue-oriented information from organizations they trust.”

The conventional wisdom holds that Americans are uninformed because they can’t be bothered to learn. But lack of motivation isn’t the whole story. Coverage of breaking news often omits the contextual information that supplies basic facts about an issue, whether it’s the federal budget or climate change. Experiments show that people can be open to information about complex subjects when it’s provided within the context of a news report.

Emily Thorson, a professor of political science, conducted an experiment in which she presented two versions of a news story about the federal budget: one with contextual information in a box and one without. When questioned, people who read the version with the contextual box reported more accurate information about the budget than those who did not.

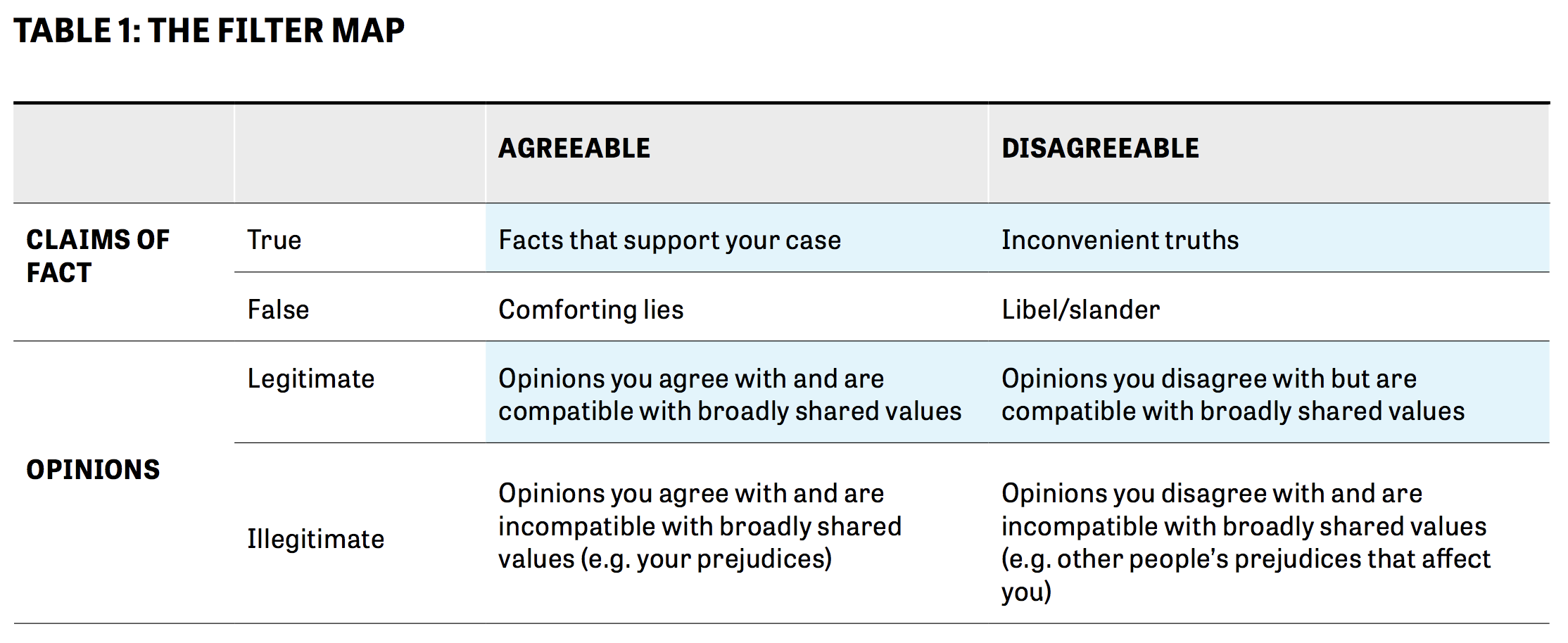

We need a way to think about information that goes beyond “agreeable” or “disagreeable.” “I object more fundamentally to the notion that all mass affirmation is always bad, and its corollary, that unwanted or unplanned encounters are always good. In true academic fashion, I will argue that it depends: each can sometimes be good and sometimes bad,” writes Deen Freelon.

Freelon proposes a different way to think about the information we consume: a three-dimensional “filter map.”

“Misinformation isn’t new,” write Danielle Allen and Justin Pottle, “and our problem is not, fundamentally, one of intermingling of fact and fiction, not a confusion of propaganda with hard-bitten, fact-based policy. Instead, it’s what we now call polarization, but what founding father James Madison referred to as ‘faction.’”

Madison wasn’t concerned about disagreement in and of itself. Rather, he looked for structural ways to bring people together despite their differences. He advocated a large republic with a relatively small legislature, in which each representative would speak for a wider variety of groups and individuals.

According to Allen and Pottle, societal changes such as the disappearance of many local and regional newspapers, the growing concentration of people in ideologically like-minded communities, and the loss of credibility of many colleges and universities among conservatives have all helped undermine “the institutions whose job it is to broker the debate within the citizenry about what different people see as credible or incredible.”

Allen and Pottle suggest a number of strategies to bring Americans together through shared experiences, such as instituting a national service requirement, establishing geographic lotteries for elite learning institutions, and reviving local journalism with philanthropy.

Nancy Watzman is editor of Trust, Media & Democracy for the Knight Commission on Trust, Media and Democracy, where this piece was originally published. The commission is developing a report and recommendations on how to improve American democracy, and it is gathering public comments for its work. Here’s how to submit yours.