During the second week of February, the Fort Worth Star-Telegram published a column that turned out to be wrong. What happened next was the catalyst for an experiment in journalistic transparency that we believe has huge potential: moving corrections along the same social-media paths as the original error.

As Star-Telegram columnist Bud Kennedy explained in a subsequent piece — the original was taken down — he’d based his commentary on what appeared to be solid reporting from another newspaper, which had based its story on government records that were, in fact, incorrect. (Read Kennedy’s column — entitled “A columnist’s apology to Dan Patrick: The tax records were wrong. So was I” — to get the context.)

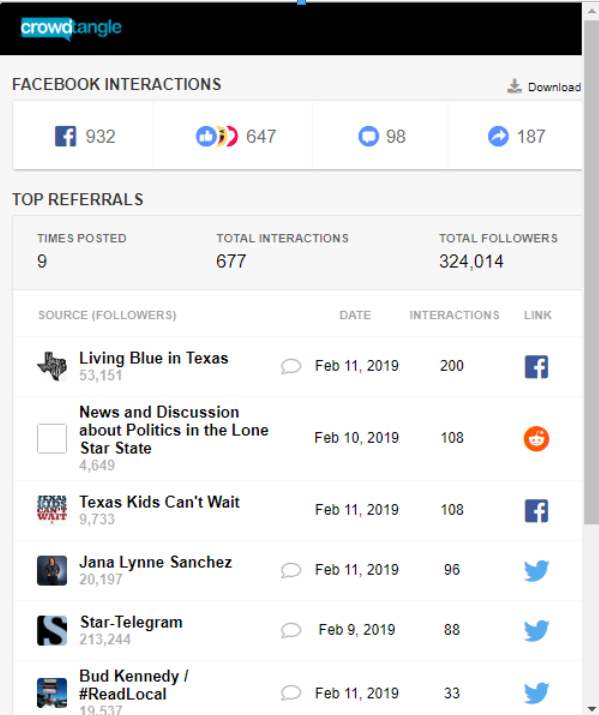

The original piece, harshly critical of a Texas politician, was shared by a number of people on social media. Some of them had substantial followings.

Kennedy’s column-length correction — in which he implored his readers to spread the word that the original was incorrect — caught the attention of David Farré in the newsroom of The Kansas City Star, a sister news organization in the McClatchy news group. We at the News Co/Lab have been working with three McClatchy newsrooms, including Kansas City’s, on embedding transparency and community engagement into the journalism.

One essential element of transparency is doing corrections right, and the Star has been updating and upgrading corrections for the 21st century. In the age of analog traditional media, the process was flawed by definition: newspaper corrections typically ran on Page 2, days or even weeks after the original error, and usually without the context a reader would need to understand exactly what had occurred. (TV news rarely corrected its errors at all, and still rarely does.)

In theory, corrections in a digital age can be much more timely and useful. We can fix the error right in the news article (or video or audio) and append an explanation, thereby limiting the damage, because people new to the article will get the correct information.

But what about the people who’ve already seen the story with the misinformation? That’s a particularly tricky question when so much news spreads these days via social media and search. What if we could do more than limit future damage? What if we could repair at least some of the previous damage by notifying people who saw the error? The need for this has been growing and was highlighted in the recently released report “Crisis in Democracy: Renewing Trust in America” from the Knight Commission on Trust, Media and Democracy. (See Chapter 5.)

The News Co/Lab and the Star have had this on our agenda for a while now. A newsroom team — including Farré and led by Eric Nelson, growth editor for McClatchy’s Central region, which includes the Kansas City and Fort Worth properties — had been looking for a way to test the idea. The Star-Telegram correction, the newsroom team thought, was an ideal opportunity to chase a mistake via social media.

Nelson asked Kennedy and his editors if we could use the correction column to give this experiment a try. They were happy to do it. Here’s some of what happened.

The Star uses Facebook’s CrowdTangle social-media monitoring tool in several ways. One particularly useful piece is its Chrome browser plugin, which lets editors discover who’s sharing the Star’s stories on Facebook and Twitter.

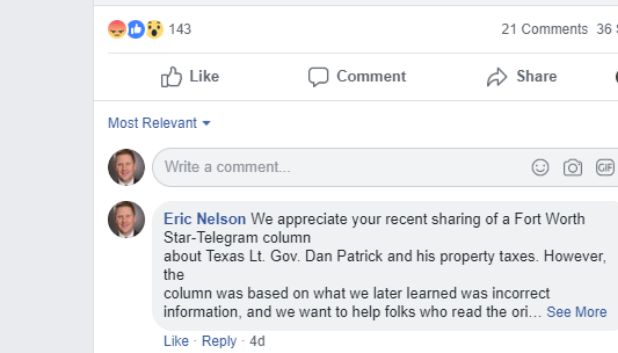

Kennedy had posted the correction to his own Twitter and Facebook feeds and sent direct messages to several people on Twitter. Eric and I went to Facebook and let the people who’d posted the original column know that it was wrong, including a link to the correction column and a request to share that, too. Here’s an example of using messaging:

And here’s an example of posting a correction directly into the replies (we did both):

The top sharers have substantial followings, and a number of people in their networks re-shared their posts. We could have gone further. Chasing down every sharer would have been possible, but it seemed of questionable value when we weighed the time required against the likely effect.

In our limited test, the results were mixed — at least from the point of view of wanting to make sure truth catches up with falsehoods. At least one person deleted the share of the incorrect column. One said directly: No thanks. Others didn’t respond. The topic may have played a role: Politics tends to be discussed by people who consider themselves members of partisan teams, and that may well make them more reluctant than others to tell people they shared something that was wrong.

The experiment did show that it’s possible to use social networks to spread corrections along the same paths the original errors moved. And we think this is potentially a very big deal.

But it’s plainly not a simple process to push out corrections this way. It’s time-consuming, and in an era when newsroom staffs are stretched to near the breaking point, it’s unrealistic to ask them to add this do-it-by-hand procedure to their jobs.

Who could help us more? Facebook, for starters. We’ve asked people there to automate this notification process and put it in CrowdTangle: Click a button, fill in a field that gives the details of the mistake and link to the correction, click another button, and the people with large followings who shared the original are notified. (Facebook knows about this experiment and is intrigued by what we’re doing. Stay tuned.)
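To make that request concrete, here is a rough sketch of the logic we have in mind, written in Python. It is an illustration only: the `Sharer` and `Correction` records, the function names and the 10,000-follower threshold are all our own hypothetical choices, and nothing here reflects CrowdTangle’s or Facebook’s actual interfaces. The sketch stops at composing the notices; actually delivering them would be the platform’s job.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sharer:
    """One account that shared the original story (hypothetical record)."""
    handle: str
    platform: str
    followers: int


@dataclass(frozen=True)
class Correction:
    """What an editor would fill in: what was wrong and where the fix lives."""
    summary: str
    correction_url: str


def select_sharers(sharers, min_followers):
    """Keep accounts at or above the threshold, largest audience first."""
    chosen = [s for s in sharers if s.followers >= min_followers]
    return sorted(chosen, key=lambda s: s.followers, reverse=True)


def build_notices(sharers, correction, min_followers=10_000):
    """Return (handle, platform, message) tuples; sending is out of scope."""
    message = (
        f"A story you shared contained an error: {correction.summary} "
        f"The correction is here: {correction.correction_url} "
        "Please consider sharing it with the same audience."
    )
    return [
        (s.handle, s.platform, message)
        for s in select_sharers(sharers, min_followers)
    ]
```

Calling `build_notices` with a list of sharers and a filled-in `Correction` returns one notice per large-following account, ordered by audience size. The threshold is the time-versus-effect tradeoff we faced by hand, expressed as a single number an editor could adjust.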

We’d like to see the same kind of functionality in Twitter and other social media channels. The platforms have everything they need to help corrections catch up with mistakes, and it would be to everyone’s benefit if they’d deploy the tools to make it happen.

What about search? A lot of what people see is based on search results. That’s on Google, which not only dominates search but also — via its advertising clout and massive data collection — knows far better than news organizations who’s reading what. (Or at least who’s clicking on what.) We need Google’s help for sure.

We can provide some help ourselves, and that’s what we’re doing.

The best fact checkers are the audience. Look at Dan Rather’s blunder. But the best people to write convincing corrections are the ones who made the original mistake — if they have the integrity to do it.

To that end, the News Co/Lab is collaborating with another of our partners, the Trust Project, which is developing “transparency standards that help [audiences] easily assess the quality and credibility of journalism.” We’re working on the project’s WordPress plugin that helps news organizations implement Trust Project functionality on their sites. Our contribution will include, among other things, an invitation for readers of these sites to subscribe to corrections. Every news organization should embed this kind of functionality into its content management system, whether that’s WordPress or something else.

Corrections, incidentally, aren’t the only valuable use for tools and approaches of this kind. Major updates to articles, especially where the situation is changing rapidly, are another natural fit. Again, however, the key word is tools, because we need the help of the platforms where so much of the news spreads in the first place.

Still, we need everyone’s help in the end. We’re human, and therefore we make mistakes. It’s everyone’s responsibility to correct public errors via the same channels through which those errors spread. If we could embed that principle into all of our online activity, we’d all be better off.

Dan Gillmor is cofounder of the News Co/Lab at Arizona State’s Walter Cronkite School of Journalism and Mass Communication, where a version of this piece was published.