In December 2016, Facebook enlisted a handful of U.S.-based news organizations (ABC News, Snopes, PolitiFact, FactCheck.org, and the AP) to help stem the flow of false information on the platform. Over time, it’s expanded these third-party fact-checking partnerships: It now has more than 50 partners globally, fact-checking in 42 languages.

Full Fact, the independent U.K. fact-checking organization, signed on as one of Facebook’s third-party fact-checking partners in January. (All partners must be members of Poynter’s International Fact-Checking Network, though that hasn’t completely prevented disputes over who should qualify to be a fact-checker; some original partners like Snopes have dropped out.) Six months in, the organization has released a report about its experience so far — what it’s learned, what it likes, and what it thinks needs to change.

Full Fact’s two major concerns about the fact-checking program are scale and transparency — ongoing complaints among Facebook’s partners. “Facebook’s focus seems to be increasing scale by extending the Third Party Fact Checking Program to more languages and countries,” the report notes. “However, there is also a need to scale up the volume of content and speed of response” — being available in a lot of countries isn’t enough if individual country partners are only able to skim the surface of misleading content.

And — like other third-party fact-checking partners — Full Fact wants Facebook “to share more data with fact-checkers, so that we can better evaluate content we are checking and evaluate our impact.” It’s not very satisfying to feel as if your fact-checks are falling into a black hole, where you’re unsure how many people will ever see them or how much of a dent you’re making.

During the six-month period, Full Fact published 96 fact-checks as part of its participation in Facebook’s program, and Facebook paid it $171,800 (“the amount of money that Full Fact is entitled to depends on the amount of fact-checking done under the program”). A little back-of-the-envelope math shows this works out to about $1,790 per fact-check, with some obviously requiring more work than others. (In the report, Full Fact describes building networks with medical and health-related organizations, police departments, academics, and so on. If you’re keeping track of how much Facebook’s fact-checking partners make and trying to guess rates, note that France’s Libération received $245,000 for 290 fact-checks in 2018.)
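For anyone who wants to redo the back-of-the-envelope math, here is a minimal sketch using only the payment totals and fact-check counts quoted above. The function name `cost_per_fact_check` is made up for illustration, and a plain average hides the fact that some checks take far more work than others.

```python
def cost_per_fact_check(total_paid_usd: float, fact_checks: int) -> float:
    """Average payment per published fact-check (a simple mean)."""
    if fact_checks <= 0:
        raise ValueError("fact_checks must be positive")
    return total_paid_usd / fact_checks


# (total paid in USD, number of fact-checks), as quoted in the article
payments = {
    "Full Fact (six months)": (171_800, 96),
    "Libération (2018)": (245_000, 290),
}

rates = {
    name: cost_per_fact_check(paid, count)
    for name, (paid, count) in payments.items()
}

for name, rate in rates.items():
    print(f"{name}: about ${rate:,.0f} per fact-check")
```

On these figures the average comes to roughly $1,790 per fact-check for Full Fact and roughly $845 for Libération, though the two cover different periods and may not be directly comparable.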

Here are some of the report’s findings, observations, and recommendations.

I hadn’t seen this much detail previously about what the “queue” entails, so quoting liberally here:

Fact checkers working on the Third Party Fact Checking program are provided by Facebook with a “queue” of content (such as text posts, images, videos and links) that it has identified as possibly false. Each fact checker’s queue is generated specifically for the territory they operate in; our queue is supposed to prioritize UK-centric content.

We do not know exactly what metrics Facebook uses to determine what goes into the queue, but we do know that it is a combination of Facebook users flagging the content as suspicious, and Facebook’s algorithms proactively identifying other signals that might suggest it is false (such as, for example, comments underneath saying “this is fake”).

The queue also includes information on the total number of shares each post has received, and the date it was first shared on. (Since the period this report covers, while it was being written, Facebook has also added information on the number of users who flagged the content, and the number of shares in the previous 24 hours.)

Fact checkers can bookmark items from the queue, to examine later and eventually attach any published fact checks to.

We are also able to proactively add posts to the queue which we have found through our own monitoring and fact checking, for example website links or Facebook posts. The posts we add must be rated either “false” or “mixture.” So far we have added one post on health: a Facebook status with almost 60,000 shares claiming a tampon could be put in a stab wound. We added another on whether there was a legal ban on British media reporting on the Yellow Vest protests in France.

From our experience so far, the majority of items in the queue are not things that we either would or could fact check: they may be statements of opinion rather than factual claims, news articles about widely accepted events, or random links that are nothing to do with factual claims at all (there was a period when there were a surprising number of Mr. Bean videos). This does not seem unusual to us; it is roughly what we would expect at this stage since launch, especially as user behavior in terms of flagging, and the precision of Facebook’s algorithms in terms of identifying useful signals, may both need time to adjust.

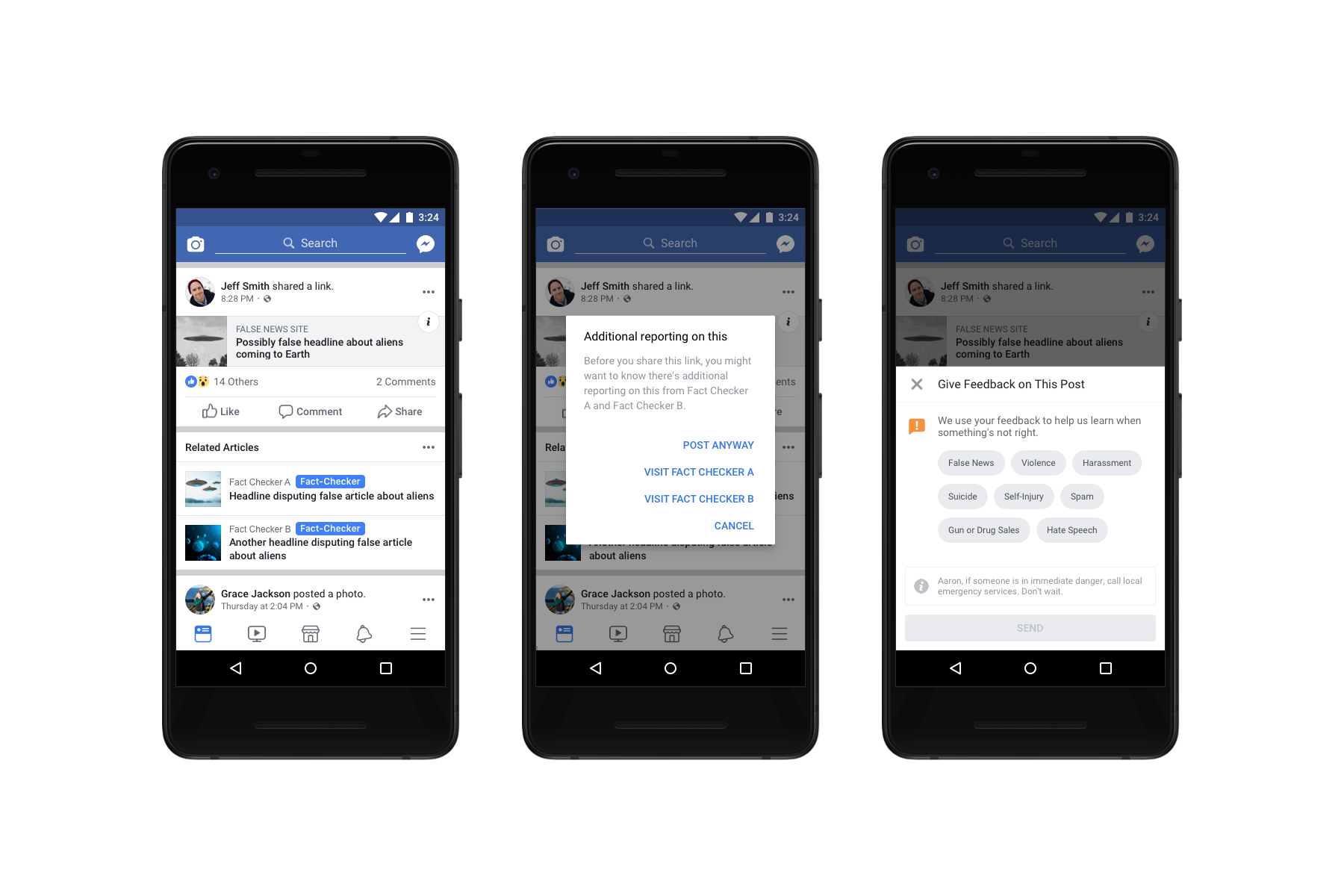

Full Fact can then attach its own (previously written or new, but must have been published to its own website) fact checks to posts in the queue, with one of nine possible ratings: False, Mixture, False Headline, True, Not eligible, Satire, Opinion, Prank Generator (“websites that allow users to create their own ‘prank’ news stories to share on social media sites”), and Not Rated. Facebook “may” then take additional action — like reducing the distribution of the post if it’s been rated False, False Headline, or Mixture. At that point, users who try to share one of these posts will receive a notification about “additional reporting on this,” with a link to the fact-check on Full Fact’s site, and will have to press “continue” if they still want to share the link.

Of Full Fact’s 96 published Facebook fact-checks, 59 rated the claim false, 19 rated the claim mixture, seven opinion, six satire and five true — and “there was no situation that we treated as a ‘Major Incident’ (a breaking news event such as a terrorist attack requiring urgent fact-checking) in this period.”
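The rating scheme and the tally above can be written down as a small data model. This is a sketch for illustration only: the `Rating` enum and the `REDUCES_DISTRIBUTION` set are hypothetical names, not part of any Facebook tool. They encode only what is stated here: nine possible ratings, three of which (False, False Headline, Mixture) may lead Facebook to reduce a post's distribution.

```python
from enum import Enum


class Rating(Enum):
    """The nine ratings available to fact-checkers, per the report."""
    FALSE = "False"
    MIXTURE = "Mixture"
    FALSE_HEADLINE = "False Headline"
    TRUE = "True"
    NOT_ELIGIBLE = "Not eligible"
    SATIRE = "Satire"
    OPINION = "Opinion"
    PRANK_GENERATOR = "Prank Generator"
    NOT_RATED = "Not Rated"


# Ratings after which Facebook "may" reduce distribution (hypothetical name).
REDUCES_DISTRIBUTION = {Rating.FALSE, Rating.FALSE_HEADLINE, Rating.MIXTURE}

# Full Fact's published fact-checks over the six-month period.
published = {
    Rating.FALSE: 59,
    Rating.MIXTURE: 19,
    Rating.OPINION: 7,
    Rating.SATIRE: 6,
    Rating.TRUE: 5,
}

total = sum(published.values())
eligible_for_reduction = sum(
    count for rating, count in published.items()
    if rating in REDUCES_DISTRIBUTION
)
share = eligible_for_reduction / total

print(f"{total} fact-checks in total")
print(f"{eligible_for_reduction} ({share:.0%}) carried a rating that may reduce distribution")
```

The counts sum to the 96 fact-checks reported, and 78 of them (about 81%) were rated False or Mixture, the ratings that can trigger reduced distribution.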

Full Fact is enlisting help in fact-checking public health issues — which often pop up on Facebook, and which are beyond its in-house expertise. “We are concerned that we are finding areas where it is hard to find sources of impartial and authoritative expert advice, particularly from organizations that are capable of responding in time to be relevant to modern online public debate,” the authors write.

Most of the false health-related claims in Full Fact’s queue are related to vaccines, but Full Fact also saw others — like a claim that “if you are suffering a heart attack, you should cough repeatedly in order to keep your heart beating” (false) and a claim that pregnancy tests can be used to check for testicular cancer (false). Full Fact hopes to work with Facebook to “identify and prioritize more particularly harmful content,” especially content relating to public health — the report’s authors realize that they’re only seeing a sliver of the harmful fake content out there.

Full Fact has to work within Facebook’s nine pre-defined categories — sometimes selecting “satire” or “opinion,” even if those categories don’t match the post’s content exactly, so that the post’s distribution isn’t reduced. “For a number of the posts we fact-checked, we found the existing rating system to be ill-suited.” For instance:

Most people wouldn’t call the video purporting to show a police officer taking drugs satire, but that is how we rated it. The video was filmed as a joke, so giving it a rating that would damage its distribution seems inappropriate. Some commenters and the person who’d posted it (who wasn’t the original creator) did seem to think it was legitimate, and it has been shared over 34,000 times. Satire seemed the best rating, as its distribution would be unaffected and it would acknowledge in some way that the content was created for humor rather than to mislead. Going forward, rating jokes (or more widely people messing around online to be funny) as satire is not ideal.

The organization recommends the introduction of new categories, such as “unsubstantiated” (“in some cases, we cannot definitively say something is false, but equally can find no evidence that it is correct” — one example was a post claiming that a Swedish woman was attacked in a nightclub by a Muslim migrant) and “more context needed”:

The highly specialist — as well as occasionally ambiguous or provisional — nature of much medical advice is one reason why we are recommending to Facebook that a “context needed” rating might be necessary. For example, we often see posts that discuss the listed side effects of various medicines, in a way that implies they are inherently dangerous. These may be technically accurate, but potentially misleading without the context of relative risks and regulatory processes.

Case study: This post lists potential side effects of one brand of contraceptive pill. Most of them are accurate, in the sense that they are listed as potential side effects, but it could well be interpreted in ways that overstate the risk. We rated it as “true,” as we did not feel it was inaccurate enough to justify even a “mixture” rating; however, we believe that a “more context” rating would have been more appropriate.

Full Fact also wants humor ratings beyond “satire” or “prank,” writing, “there should also be a rating for the broader category of non-serious, lighthearted or humorous posts that people might understand. Like the ‘satire’ rating, this should not reduce the reach of the post. It is not our job to judge the quality of people’s senses of humor.”

Finally, many pieces of content don’t fall into just one bucket; Full Fact recommends enabling fact-checkers to apply multiple ratings to the same post.

The potential to prevent harm is high here, particularly with the widespread existence of health misinformation on the platform. Facebook has already taken some steps toward using the results of the program to influence content on Instagram, or Instagram images that are shared to Facebook. However, directly checking content on Instagram is not yet a part of the program.

Full Fact submitted an early version of the report to Facebook, and Facebook responded, in part: “We know there’s always room to improve. This includes scaling the impact of fact-checks through identical content matching and similarity detection, continuing to evolve our rating scale to account for a growing spectrum of types of misinformation, piloting ways to utilize fact-checkers’ signals on Instagram and more. We also agree that there’s a need to explore additional tactics for fighting false news at scale.”

You can read the full report, which includes many more observations and recommendations, here.