The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

In the EU, junk is replacing news from the government, not news from the mainstream media. People in France, the UK, and Germany shared plenty of “professionally produced information from media outlets” on Twitter during the 2017 elections — but they also shared a lot of “junk news,” at the expense of content from political parties and the government.

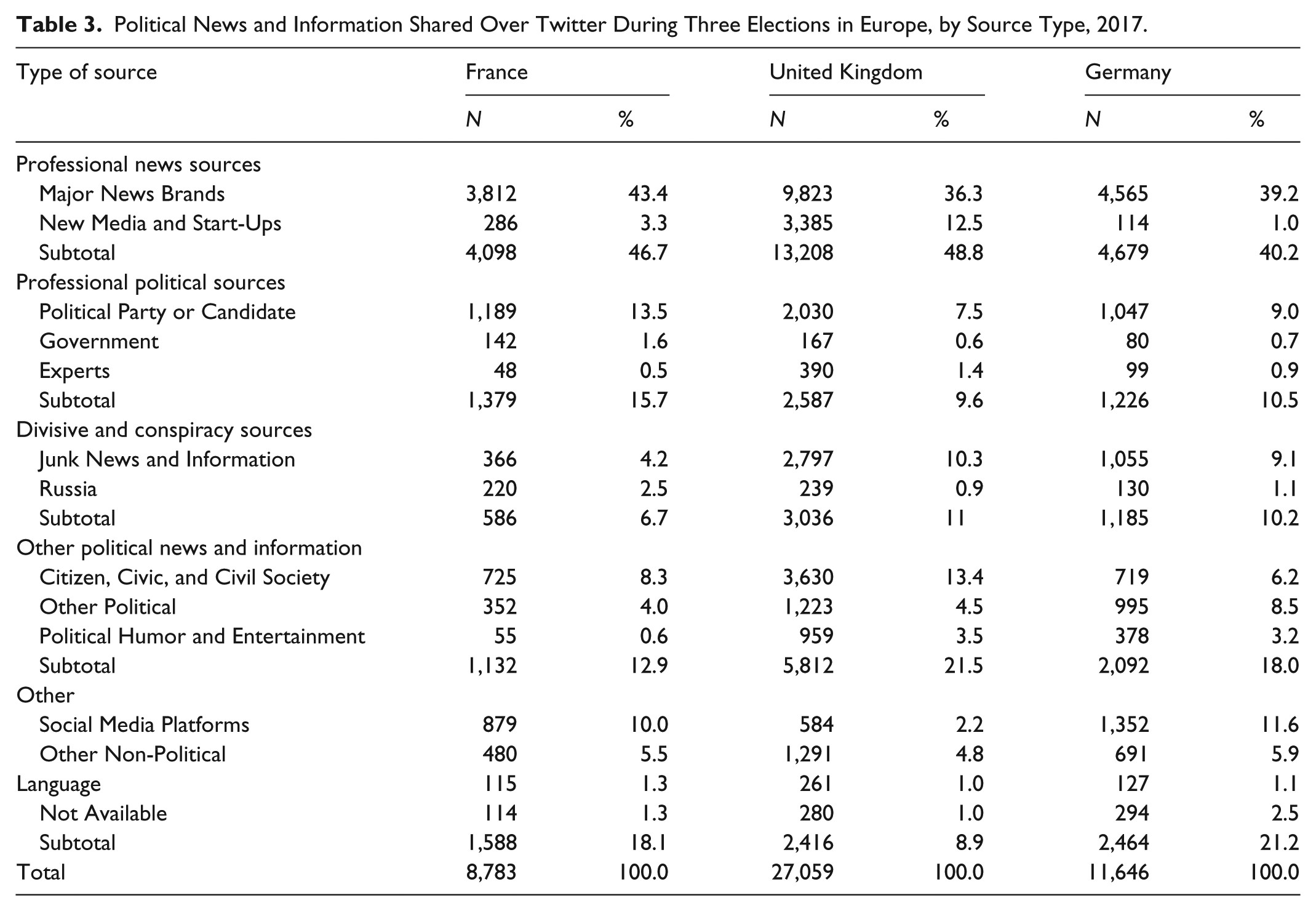

Lisa-Maria Neudert, Philip Howard, and Bence Kollanyi analyzed more than four million tweets from three elections and tracked and categorized the 5,158 sources they linked to.

The categorization was thoughtfully done. There were five categories:

1. Professional News and Information, including major news brands and legit digitally native sites and startups. Tabloids were also included in this category, unless they “failed on at least three out of five criteria for junk news.”
2. Professional Political Sources, including political parties and candidates, government websites, and expert whitepapers.
3. Divisive and Conspiracy sources, including junk news and information and content created by state-funded Russian sources. The researchers classified “junk news” as meeting at least three of the five following criteria: “(1) professionalism, where sources fall short of the standards of professional journalistic practice including information about authors, editors, and owners; (2) style, where inflammatory language, suggestion, ad hominem attacks and misleading visuals are used; (3) credibility, where sources report on unsubstantiated claims, rely on conspiratorial and dubious sources, and do not post corrections; (4) bias, where reporting is highly biased and ideologically skewed and opinion is presented as fact; and (5) counterfeit, where sources mimic established news publications in branding, design, and content presentation.”
4. Other Political News and Information, including content produced by independent citizens, civic groups, watchdog groups, lobby and interest groups, as well as humor and entertainment.
5. Other: URLs that referred to content on other social media platforms, or spam.
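The three-of-five rule for junk news is simple enough to write down as a check. What follows is only a minimal sketch in Python, assuming a hypothetical hand-coded record for each source; the class, field names, and example sources are illustrative and are not the researchers' actual tooling.

```python
from dataclasses import dataclass, fields


@dataclass
class CriteriaFlags:
    """Hypothetical coding sheet: True means the source fails that criterion."""
    professionalism: bool = False
    style: bool = False
    credibility: bool = False
    bias: bool = False
    counterfeit: bool = False


# "At least three of the five" criteria, per the definition quoted above.
JUNK_THRESHOLD = 3


def is_junk_news(flags: CriteriaFlags) -> bool:
    """Return True when a source fails enough criteria to count as junk news."""
    failed = sum(getattr(flags, f.name) for f in fields(flags))
    return failed >= JUNK_THRESHOLD


# Made-up examples: a tabloid failing two criteria stays in the
# professional-news category; a site failing four does not.
tabloid = CriteriaFlags(style=True, bias=True)
conspiracy_site = CriteriaFlags(
    professionalism=True, style=True, credibility=True, bias=True
)

print(is_junk_news(tabloid))          # False
print(is_junk_news(conspiracy_site))  # True
```

The threshold explains why tabloids could land in the professional category: inflammatory style and bias alone are two failures, one short of the cutoff.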

Here’s the breakdown:

And here are the three main findings:

First, our analysis demonstrates that in France, for every one link to Junk News Sources, there were seven links to content produced by professional news organizations. In the United Kingdom, the ratio was 5 to 1, and in Germany, the ratio was 4 to 1. The low proportion of junk news in France may be related to national political communication cultures which traditionally emphasize political debate in everyday life and social systems.

Second, only a small number of domains were operated by known Russian sources during the time of the elections despite mainstream Russian outlets and their respective national offshoots having a substantial following in France, the United Kingdom, and Germany.

Third, across national elections, the category of Professional News Sources was the largest category of sources shared during the elections that was at least roughly double as large as the second largest category of sources proportionally. Although many have suggested we are in a “post-truth” era with declining levels of trust in the media, this finding suggests that social media users in Europe are still widely relying on traditional news media to inform their political decision making.

So the real casualty here wasn’t professionally produced news — it was content from political parties, government agents, and experts:

In France, for every one link to Divisive and Conspiratorial Sources, social media users shared more than 2.4 links to information coming directly from political parties, government agencies, or acknowledged experts. One might consider that ratio already too low. But in the United Kingdom, the ratio was the inverse, such that for each link to Divisive and Conspiratorial Sources, there were 0.8 links to sources from traditional political actors, and in Germany, the proportions were at par with a one to one ratio between these kinds of sources.

Your intuition is probably wrong. In a study by Rutgers’ Chris Leeder, college students were tested on their ability to accurately identify fake versus real news stories. Here are some of the differences between those who performed well and those who didn’t:

In the high-performing group, 5 participants reported searching for other sources (26.32%), 4 reported using Google to search the topic (21.05%), and 4 reported using Snopes or fact-checking sites (21.05%). In the medium-performing group, 3 individuals reported searching for other sources (15.79%), 2 using Google (10.53%) and none reported using Snopes. No individuals in the low-performing group reported using any of these strategies. Additionally, 3 participants in the low-performing group reported that they based their decisions on “intuition” or “instinct” (12.00%) while no members of the other groups reported that strategy. Thus, while reported use of effective verification strategies overall was low, participants in the high-performing group were more likely to employ them…
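The excerpt gives counts and percentages but not the size of each group, which matters for judging how much weight the differences can bear. The sizes can be backed out from the reported figures. This is a rough sketch in Python; the function name is made up for illustration, and the inputs are only the count and percentage pairs quoted above.

```python
def implied_group_size(count: int, percent: float) -> int:
    """Back out the group size from a reported count and its percentage."""
    return round(count / (percent / 100))


# (count, percent) pairs taken from the passage above.
reported = {
    "high": (5, 26.32),    # searched for other sources
    "medium": (3, 15.79),  # searched for other sources
    "low": (3, 12.00),     # relied on "intuition" or "instinct"
}

for group, (count, percent) in reported.items():
    print(f"{group}: {implied_group_size(count, percent)}")
# high: 19
# medium: 19
# low: 25
```

With roughly 19 to 25 people per group, a gap of two or three participants moves a percentage by ten points or more, so the differences are suggestive rather than conclusive.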

While reliance on social media was high across all groups, the response data shows that a larger percentage of the low-performing group selected social media as a news source (92.00%) compared to the high-performing group (84.21%) and the medium-performing group (89.47%). In contrast, a larger percentage of the high-performing group selected national TV/cable news sites (57.89%) compared to the medium-performing (36.84%) and the low-performing group (40.00%). A larger percentage of the medium-performing group selected major newspaper sites as a news source (47.36%) than the higher-performing (36.84%) or the low-performing (40.00%) groups. Although the number of cases is small, the results suggest a difference in behavior between the groups regarding use of news sources. One possible explanation for this result is that exposure to news presented by outlets which follow traditional journalistic standards (national TV/cable news networks and major newspapers) may help participants learn to accurately identify fake news.

Not just detecting fake news, but explaining why it’s fake. There are various algorithms out there to detect fake news, but Penn State researchers came up with one that also explains why the news is fake. It relies on user comments:

In their study, the researchers built an explainable fake-news detection framework, which they call dEFEND (Explainable FakE News Detection). The framework consists of three components: (1) a news content encoder, to detect opinionated and sensational language styles commonly found in fake news; (2) a user comment encoder, to detect activities such as skeptical opinions and sensational reactions in comments on news stories; and (3) a sentence-comment co-attention component, which detects sentences in news stories and user comments that can explain why a piece of news is fake.
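To give a feel for what a sentence-comment co-attention step does, here is a toy sketch in Python. It is not the dEFEND implementation: the real framework learns its encoders and attention weights from data, whereas this version assumes sentences and comments have already been turned into vectors (the two-dimensional embeddings below are invented) and simply scores each sentence by its strongest affinity with any comment, and each comment by its strongest affinity with any sentence.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def co_attention(sentences, comments):
    """Toy co-attention over pre-computed sentence and comment vectors."""
    # affinity[i][j]: how strongly sentence i relates to comment j.
    affinity = [[dot(s, c) for c in comments] for s in sentences]
    # Score each sentence by its best-matching comment, and vice versa.
    sentence_scores = [max(row) for row in affinity]
    comment_scores = [
        max(affinity[i][j] for i in range(len(sentences)))
        for j in range(len(comments))
    ]
    return softmax(sentence_scores), softmax(comment_scores)


# Invented embeddings, for illustration only.
sentences = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.1]]
comments = [[0.9, 0.1], [0.1, 0.0]]

sentence_weights, comment_weights = co_attention(sentences, comments)
top_sentence = max(range(len(sentences)), key=sentence_weights.__getitem__)
top_comment = max(range(len(comments)), key=comment_weights.__getitem__)
print(top_sentence, top_comment)  # 0 0
```

The highest-weighted sentence and comment are the pair a system of this kind would surface as the candidate explanation.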

The new detection algorithm designed and developed in this novel approach has outperformed seven other state-of-the-art methods in detecting fake news, according to the researchers.

“Among the users’ comments, we can pinpoint the most effective explanation as to why this [piece of news they are reading] is fake news,” explained [Dongwon] Lee. “Some users expressed discontent but others provide particular evidence, such as linking to a fact-checking website or to an authentic news article. These techniques can concurrently find such evidence and present it to the user as potential explanation.”