The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

“People should be able to hear from those who wish to lead them, warts and all.” Facebook’s announcement this week that it’s banning deepfakes ahead of the 2020 election didn’t exactly leave people cheering, especially since it also repeated that it will continue to allow politicians and political campaigns to lie on the platform (a decision “largely supported” by the Trump campaign and “decried” by many Democrats, in The New York Times’ phrasing).

“People should be able to hear from those who wish to lead them, warts and all,” Facebook said in a blog post.

Big win for Trump. A decision by Facebook to avoid antagonizing the president — and risking breakup — that will have huge consequences in the 2020 campaign. https://t.co/soqvMxslZ5

— Nick Confessore (@nickconfessore) January 9, 2020

Federal Election Commissioner Ellen Weintraub:

I am not willing to bet the 2020 elections on the proposition that Facebook has solved its problems with a solution whose chief feature appears to be that it doesn’t seriously impact the company’s profit margins.

/3

— Ellen L Weintraub (@EllenLWeintraub) January 9, 2020

But this shouldn’t be framed as a partisan issue, argued Alex Stamos, the former chief security officer at Facebook who is now at Stanford.

I'm disappointed with the Facebook ad decision. Not a smart move for the company; targeting limits and a minimal standard on claims about opponents would represent a defensible, non-partisan and helpful position.

From chat with @mathewi on this: https://t.co/9CAlO0xryK

— Alex Stamos (@alexstamos) January 9, 2020

I also disagree with both Facebook’s and the media’s framing of this as a partisan issue. Such framing almost guarantees that Facebook will not make a proactive move and significant pressure will be applied to other companies to do the same.

[Insert Tolkien/Rawls mashup here]

— Alex Stamos (@alexstamos) January 9, 2020

Facebook is also adding a feature that lets people choose to see fewer political ads. From the company’s blog post:

Seeing fewer political and social issue ads is a common request we hear from people. That’s why we plan to add a new control that will allow people to see fewer political and social issue ads on Facebook and Instagram. This feature builds on other controls in Ad Preferences we’ve released in the past, like allowing people to see fewer ads about certain topics or remove interests.

it will be interesting to see the implementation of these tools. but i do wonder how many people know where even to find the ad transparency screen. historically, and totally anecdotally, i've had to walk so many friends and colleagues through this. https://t.co/JTDEyepJpF

— Tony Romm (@TonyRomm) January 9, 2020

Meanwhile, in the week that Facebook banned deepfakes — but not the much more common “cheapfakes” or other kinds of manipulated media — Reddit banned both. The updated rule language:

Do not impersonate an individual or entity in a misleading or deceptive manner.

Reddit does not allow content that impersonates individuals or entities in a misleading or deceptive manner. This not only includes using a Reddit account to impersonate someone, but also encompasses things such as domains that mimic others, as well as deepfakes or other manipulated content presented to mislead, or falsely attributed to an individual or entity. While we permit satire and parody, we will always take into account the context of any particular content.

Key that the new Reddit policy includes “Deepfakes and other manipulated content” — unlike the fraught @Facebook policy from earlier this week, which limits itself solely to AI-modified or synthesized videos. https://t.co/xMSSArXvCk

— Lindsay P. Gorman (@LindsayPGorman) January 9, 2020

TikTok also clarified some of its content moderation rules this week.

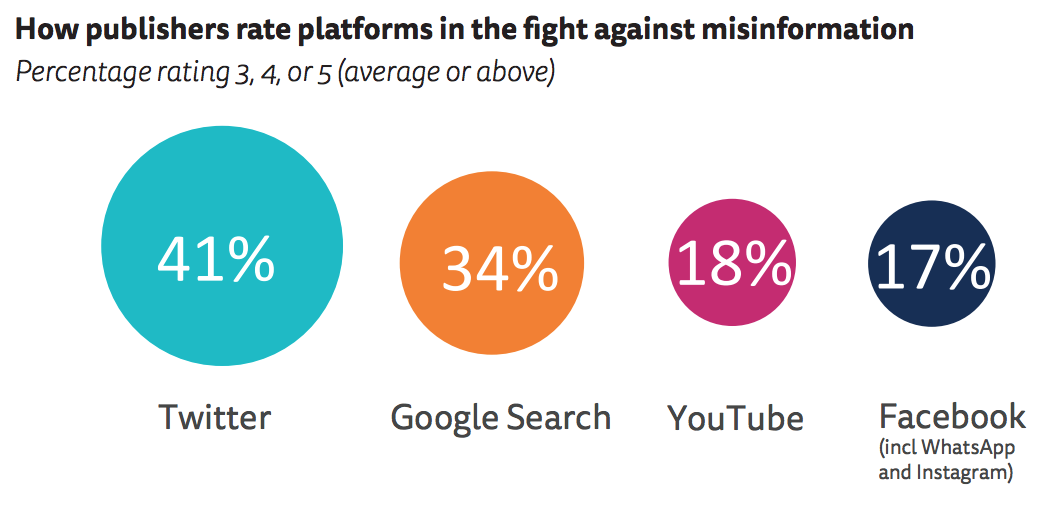

Most trusted: Twitter? Also this week, the Reuters Institute for the Study of Journalism released a report surveying 233 “media leaders” from 32 countries. Of the platforms, respondents believe Twitter is doing the best job of combatting misinformation and Facebook the worst. Still, even for Twitter, only 41 percent of respondents rated the job it was doing as “average” or better.

An argument that YouTube would do just as much damage without its algorithm. Becca Lewis, who researches media manipulation and political digital media at Stanford and Data & Society, argues in FFWD (a Medium publication about online video) that “YouTube could remove its recommendation algorithm entirely tomorrow and it would still be one of the largest sources of far-right propaganda and radicalization online.”

“When we focus only on the algorithm, we miss two incredibly important aspects of YouTube that play a critical role in far-right propaganda: celebrity culture and community,” Lewis writes. From her article:

When a more extreme creator appears alongside a more mainstream creator, it can amplify their arguments and drive new audiences to their channel (this is particularly helped along when a creator gets an endorsement from an influencer whom audiences trust). Stefan Molyneux, for example, got significant exposure to new audiences through his appearances on the popular channels of Joe Rogan and Dave Rubin.

Importantly, this means the exchange of ideas, and the movement of influential creators, is not just one-way. It doesn’t just drive people to more extremist content; it also amplifies and disseminates xenophobia, sexism, and racism in mainstream discourse. For example, as Madeline Peltz has exhaustively documented, Fox News host Tucker Carlson has frequently promoted, defended, and repeated the talking points of extremist YouTube creators to his nightly audience of millions.

Additionally, my research has indicated that users don’t always just stumble upon more and more extremist content — in fact, audiences often demand this kind of content from their preferred creators. If an already-radicalized audience asks for more radical content from a creator, and that audience is collectively paying the creator through their viewership, creators have an incentive to meet that need…

All of this indicates that the metaphor of the “rabbit hole” may itself be misleading: it reinforces the sense that white supremacist and xenophobic ideas live at the fringe, dark corners of YouTube, when in fact they are incredibly popular and espoused by highly visible, well-followed personalities, as well as their audiences.