The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

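— In the Reuters Institute for the Study of Journalism’s (RISJ) 2020 Digital News Report, which surveyed people in 40 countries, 56 percent of respondents say they’re worried about “what is real and what is fake on the internet.”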

— Across countries, people were most likely to see domestic politicians as responsible for spreading “false and misleading information” online (40 percent); only 13 percent said they were most concerned about false information being spread by journalists. But in highly polarized countries like the United States, the situation is different:

Left-leaning opponents of Donald Trump and Boris Johnson are far more likely to blame these politicians for spreading lies and half-truths online, while their right-leaning supporters are more likely to blame journalists. In the United States more than four in ten (43%) of those on the right blame journalists for misinformation — echoing the President’s anti-media rhetoric — compared with just 35% of this group saying they are most concerned about the behavior of politicians. For people on the left the situation is reversed, with half (49%) blaming politicians and just 9% blaming journalists.

Facebook is widely seen as the most concerning platform for fake news spread, though “in parts of the Global South, such as Brazil, people say they are more concerned about closed messaging apps like WhatsApp (35%). The same is true in Chile, Mexico, Malaysia, and Singapore. This is a particular worry because false information tends to be less visible and can be harder to counter in these private and encrypted networks.”

— People widely believe that the media should report on “potentially dubious statements from politicians”:

In almost every market, people say that when the media has to deal with a statement from a politician that could be false, they would prefer them to “report the statement prominently because it is important for the public to know what the politician said” rather than “not emphasize the statement because it would give the politician unwarranted attention”…

As well as being consistent across almost all countries, this general view appears to be consistent across a range of different socio-demographic groups like age, gender, and political leaning. Even in the U.S., where some might assume partisan differences due to different political styles, a majority of those on the left (58%) and right (53%) would prefer potentially false statements to be reported prominently — though perhaps for different reasons.

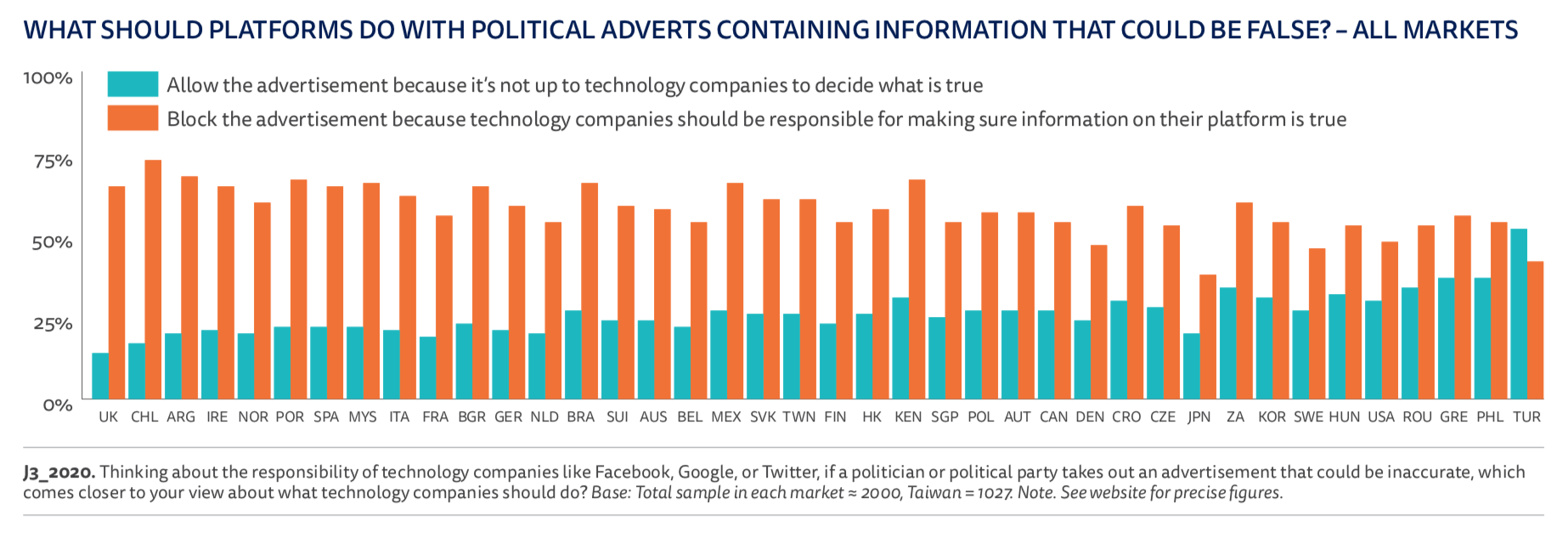

People also widely believe that platforms should block political advertisements that could contain false information: The report found “little evidence of widespread concern over letting technology companies make decisions about what to block and what to allow.” Note, though, that in the U.S. the “block” and “don’t block” groups are closer together than they are in most of the other countries surveyed:

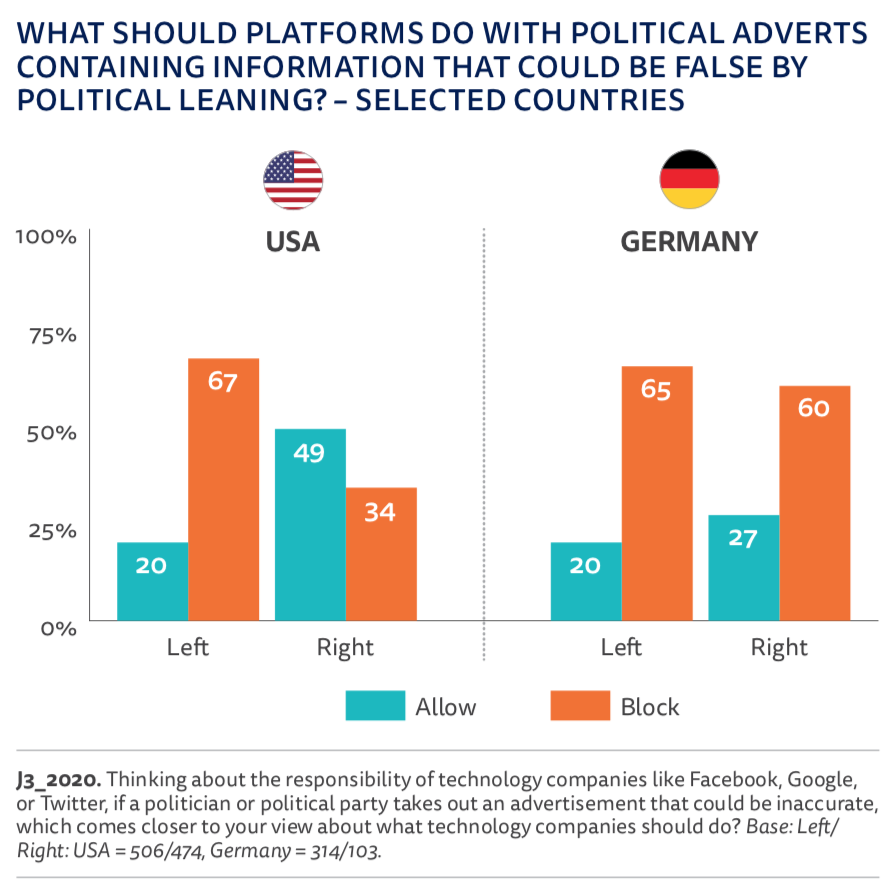

Partisanship matters here, again: In the U.S., people whose political beliefs lean to the right are more likely to think that political ads that might contain false information should be allowed.

By the way, Facebook said this week that it and Instagram will allow users to “opt out of seeing social issue, electoral or political ads from candidates or political action committees.” Mike Isaac wrote in The New York Times:

The move allows Facebook to play both sides of a complicated debate about the role of political advertising on social media ahead of the November presidential election. With the change, Facebook can continue allowing political ads to flow across its network, while also finding a way to reduce the reach of those ads and to offer a concession to critics who have said the company should do more to moderate noxious speech on its platform.

So instead of holding a line on politicians paying to spread lies on its platform, @facebook will allow “some” Americans to turn lies off? @twitter’s outright ban was braver. @finkd: Please ban microtargeting, don’t take dark money, & enforce integrity policies on all ad buyers https://t.co/VCySWJkDZa

— Alex Howard (@digiphile) June 17, 2020

I can’t believe how much ink the press is giving Facebook for this spineless move that essentially is a user option which will be mostly set by the most sophisticated users and mostly ignored by those most vulnerable to disinfo. https://t.co/PdIYBNNM9r

— Jason Kint (@jason_kint) June 17, 2020

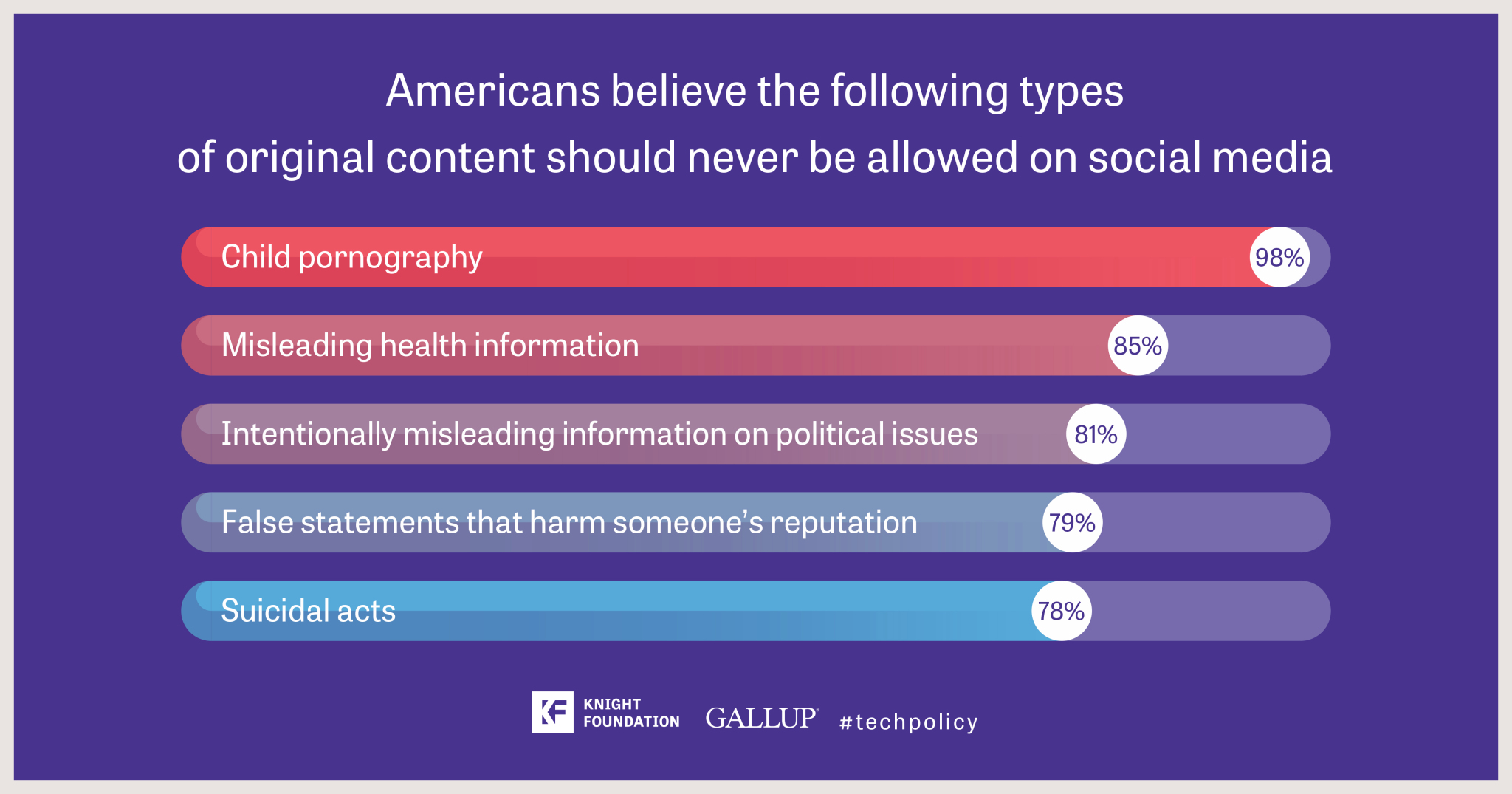

RISJ’s findings were echoed by those in a new report from Gallup/Knight that found that even though Americans don’t trust social media companies to police the content on their platforms, they also think false and misleading information should be removed from those platforms:

“If someone’s going to take on critics of vaccines in a strong and aggressive way, it’s probably going to have to be someone outside an official government public health agency.” Wired’s Megan Molteni writes about the Public Good Projects, a public health nonprofit that this week launched an initiative called Stronger that “aims to take the fight to anti-vaccine organizers where they’ve long had the upper hand: on social media. To do so, PGP plans to conscript the vast but largely silent majority of Americans who support vaccines into an army of keyboard warriors trained to block, hide, and report vaccine misinformation.” (PGP CEO Joe Smyser noted recently that anti-vaccine messaging on social media has roughly tripled since the pandemic began.) Here’s how it’ll work:

PGP plans to recruit people who think vaccines are a vital public good and who have a track record of activism — doing things like signing petitions or attending demonstrations. Those people will sign up to receive information about how to combat vaccine-related misinformation when they see it. When a particular piece of misinformation is either about to be seen by millions of people, or has just reached that point, alerts will go out from PGP telling these volunteers what to look for and recommending language for counter-posting. Their idea is to create an army of debunkers who can quickly be mobilized into action. Having enough people is paramount; there’s safety in numbers when posting on a topic that’s likely to spawn a vigorous online battle.