Our Duke Tech & Check team has made great progress automating fact-checking, but we recently had a sobering realization: We need human help.

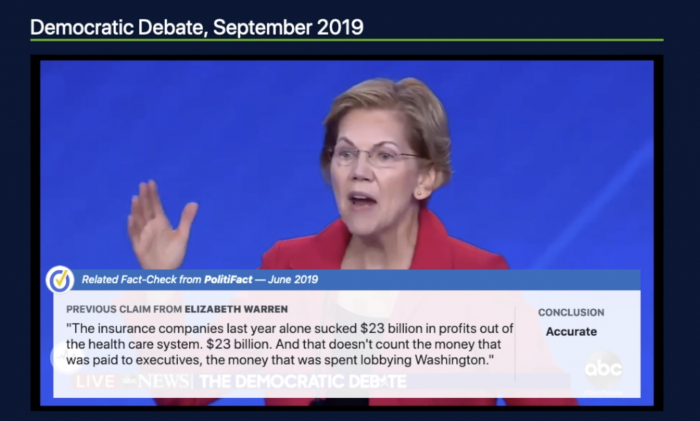

We’ve built some impressive tools since we started three years ago. Every day our bots send dozens of journalists our Tech & Check Alerts: emails listing factual claims that politicians have made on cable TV or Twitter, which help the journalists find new claims to check. We also invented Squash, a groundbreaking experimental video app that displays relevant fact-checks during a speech or a debate when a candidate or elected official repeats a claim that has already been checked.

But for all that progress, we’ve realized that human help is still vital.

It’s an important discovery, not just for automation in fact-checking but for similar efforts in other journalistic genres. We’ve found that artificial intelligence is smart, but it’s not yet smart enough to make final decisions or to avoid the robotic repetition that is an unfortunate trait of, um, robots.

In the case of Squash, we need humans to make final decisions about which fact-checks to display on the screen. Our voice-to-text and matching algorithms are good — and getting better — but they’re not great. And sometimes they make some really bad matches. Like, comically bad.

Depending on the quality of the audio and how often the person speaking has been fact-checked, Squash might find anywhere from zero (Andrew Yang) to several dozen (President Trump) matching fact-checks during a speech. But even with Trump, many of those matches won’t be relevant. For example, Trump might make a claim about space travel, but Squash will mistakenly match it with a previous fact-check on the bureaucracy of building a road.

We’re making progress to improve those matches, which depend on a database of fact-checks marked with ClaimReview, a tagging system we helped develop that search engines and social platforms use to find relevant articles. A new approach we’re exploring uses subject tagging to improve our hit rate. And in the meantime, we’ve also taken a back-to-the-future approach by bringing humans into the equation.

The idea is that instead of leaving the decision entirely to Squash’s AI, we have it suggest possible matches and then let humans make the final call. Our lead technologist Christopher Guess and his team have built a new interface that lets us do exactly that, weeding out bad matches such as that irrelevant fact-check on road building. We call the new tool Gardener.
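To make the suggest-then-decide idea concrete, here is a minimal Python sketch. It is not Squash’s or Gardener’s actual code: the `FactCheck` record, the word-overlap `similarity` score, the subject-tag filter and the `approve` callback are all hypothetical stand-ins, assuming each fact-check in the database carries a claim, a rating and a set of subject tags. The machine ranks candidates; a person makes the final call.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FactCheck:
    # Hypothetical record; a real system might build these from ClaimReview markup.
    claim: str
    rating: str
    subjects: set[str] = field(default_factory=set)


def tokens(text: str) -> set[str]:
    # Lowercased words with surrounding punctuation stripped.
    words = (w.strip(".,!?\"'").lower() for w in text.split())
    return {w for w in words if w}


def similarity(a: str, b: str) -> float:
    # Jaccard overlap of word sets: a crude stand-in for a real matching model.
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def suggest_matches(statement: str, subjects: set[str], database: list[FactCheck],
                    threshold: float = 0.3, limit: int = 3) -> list[FactCheck]:
    """Machine step: rank fact-checks that share a subject tag with the statement."""
    scored = [
        (similarity(statement, fc.claim), fc)
        for fc in database
        if fc.subjects & subjects  # skip off-topic fact-checks entirely
    ]
    scored = [(score, fc) for score, fc in scored if score >= threshold]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [fc for _, fc in scored[:limit]]


def review(candidates: list[FactCheck],
           approve: Callable[[FactCheck], bool]) -> list[FactCheck]:
    """Human step: only suggestions an editor approves go on screen."""
    return [fc for fc in candidates if approve(fc)]


# Usage with made-up data: the road-building fact-check never reaches the
# reviewer because it carries a different subject tag.
db = [
    FactCheck("Unemployment is at a record low", "Mostly True", {"economy"}),
    FactCheck("The road took ten years to permit", "True", {"infrastructure"}),
]
candidates = suggest_matches("Unemployment is at a record low today", {"economy"}, db)
on_screen = review(candidates, approve=lambda fc: True)  # stand-in for a person clicking "approve"
```

Keeping the human step separate from the ranking step means the matcher can be tuned or replaced without changing who has the final say.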

We’ve just begun tests with Gardener, but the early results are promising.

We had a similar epiphany about Tech & Check Alerts, our automated product that sends claims to journalists. We decided that it, too, needed a human touch.

The algorithm we use, ClaimBuster, dutifully found claims in transcripts from cable news and Twitter, and our programs automatically sorted the most promising statements to fact-check, compiled them into an email and sent it to editors and reporters at the Washington Post, New York Times, Associated Press and several other fact-checking organizations. We also produced a local version for fact-checkers in North Carolina.
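The sorting step can be sketched roughly like this, assuming each statement has already been given a check-worthiness score between 0 and 1 (ClaimBuster produces scores of this kind). The `Statement` record, the 0.5 threshold, the 10-item limit and the email format are illustrative assumptions, not the Reporters’ Lab’s actual pipeline.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Statement:
    speaker: str
    source: str   # e.g. "Twitter" or "cable TV transcript"
    text: str
    score: float  # check-worthiness score, assumed to lie between 0 and 1


def build_digest(statements: list[Statement], threshold: float = 0.5, limit: int = 10) -> str:
    """Keep the highest-scoring statements and format them as a plain-text email body."""
    worthy = sorted(
        (s for s in statements if s.score >= threshold),
        key=lambda s: s.score,
        reverse=True,
    )
    lines = ["Claims worth checking today:", ""]
    for rank, s in enumerate(worthy[:limit], start=1):
        lines.append(f'{rank}. {s.speaker} ({s.source}, score {s.score:.2f}): "{s.text}"')
    return "\n".join(lines)


# Made-up statements for illustration only.
digest = build_digest([
    Statement("Speaker A", "Twitter", "Crime fell 20 percent last year.", 0.91),
    Statement("Anchor B", "cable TV transcript", "We'll be right back after this.", 0.05),
    Statement("Speaker C", "cable TV transcript", "We created 50,000 jobs.", 0.78),
])
print(digest)
```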

The claims our “robot intern” selected each day weren’t always perfect. But the process distilled 24 hours’ worth of talking heads and thousands of tweets into a skimmable newsletter, with a short list of potential statements that might be worth examining. And fact-checkers have used our daily, automated alerts to do exactly that.

But we noticed a problem: the journalists weren’t using our email as much as we had hoped. It looked the same every day, and it was cluttered with statements that weren’t useful to them, including remarks by cable news reporters, which were of little or no interest to fact-checkers. In an overstuffed inbox, these automated emails were easy to ignore or delete.

So we brought in a human. We hired Duke student Andrew Donohue to pick the most promising claims from the bot’s list and some other sources and to compose a separate email that we called “Best of the Bot.” We gave it personality and occasionally poked fun at ClaimBuster. (We were gentle, though. It’s wise to be nice to our future robot overlords!)

We combined automation and personalization. Donohue chose a claim or two to highlight for the same distribution list that already received the automated alerts.

We sent the first Best of the Bot on Sept. 13, 2019 — about a Twitter thread on the Obama administration’s immigration record that Joe Biden’s campaign posted during a Democratic presidential debate the previous night. “The Obama-Biden Administration did not conduct workplace raids — in fact, it ended them,” one of the tweets claimed.

PolitiFact staffers, alerted to it by Donohue’s Best of the Bot email, looked into the claim and rated it “Half True.”

As of July 2020, these alerts go to more than two dozen journalists at eight U.S. fact-checking projects.

We had reason to think that fact-checkers would respond well to this based on some of our earlier efforts at human curation.

One example involved a tweet by Kanye West related to his famous 2018 Oval Office visit. The statement was about a Wisconsin company, so we shared it with the Milwaukee Journal Sentinel, PolitiFact’s longtime state partner. The claim had already appeared in our automated reports to fact-checkers, but in this case our personal outreach to the Journal Sentinel’s fact-checkers, following up on the automated alert, led to a published fact-check.

We also experimented with curated tip sheets for The News & Observer, PolitiFact’s North Carolina partner in the 2018 campaign. Unlike our national alerts, this was not an entirely automated service: In addition to scraping tweets, we enlisted student researchers to help us monitor social media posts and political ads. Then a very human intermediary, Cathy Clabby, an N&O alum who is now a Duke faculty member and an editor at the Reporters’ Lab, summarized the best claims we found in a personalized email to the newspaper’s reporters and editors. The result: many published fact-checks.

Our experiences with these kinds of personalized tips inspired the Best of the Bot Alerts. But there would be no “best of” without the bot. Our staff could not generate these semi-daily messages without the lists of statements that our scrapers and ClaimBuster find for us each day. While our customers — the nation’s fact-checkers — still seem to prefer human selection and editing to distill the bot’s findings into a product that busy journalists can use, automation is what makes that human touch possible.

Our goal with Tech & Check, which is funded by Knight Foundation, the Facebook Journalism Project, and Craig Newmark, is to use automation to help fact-checking. But we’ve found that AI isn’t quite smart enough yet. It can’t always find the most relevant matches for Squash or generate lively emails. Bots are good at repetitive tasks, but for now, they still need help from us.

Bill Adair and Mark Stencel are co-directors of the Duke University Reporters’ Lab.