One idle Saturday afternoon I wreaked havoc on the virtual town of Harmony Square, “a green and pleasant place,” according to its founders, famous for its pond swan, living statue, and Pineapple Pizza Festival. Using a fake news site called Megaphone — tagged with the slogan “everything louder than everything else” — and an army of bots, I ginned up outrage and divided the citizenry. In the end, Harmony Square was in shambles.

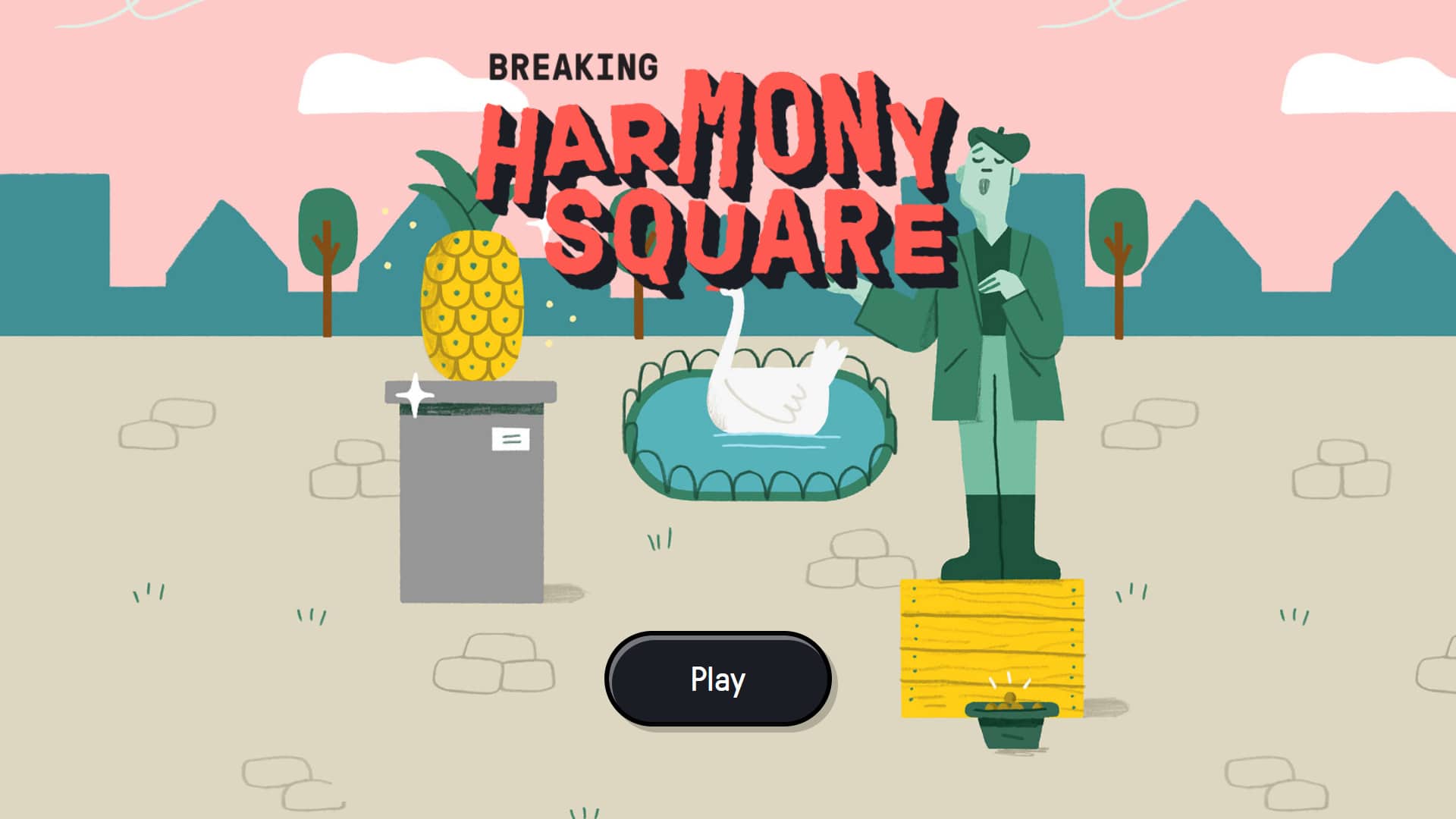

I thoroughly enjoyed my 10 minutes of villainy — even laughed outright a few times. And that was the point. My beleaguered town is the center of the action for the online game Breaking Harmony Square, a collaboration between the U.S. Departments of State and Homeland Security, psychologists at the University of Cambridge in the United Kingdom, and DROG, a Dutch initiative that uses gaming and education to fight misinformation. In my role as Chief Disinformation Officer of Harmony Square, I learned about the manipulation techniques people use to gain a following, foment discord, and then exploit societal tensions for political purposes.

The game’s inventors use a psychological concept borrowed from immunology: inoculation. The gameplay acts like a vaccine, so the next time people encounter such manipulation in the real world, they’ll recognize it for what it is. Research shows that people who play such games are better than controls at spotting manipulation techniques, more confident in their judgments, and less likely to share misinformation. Indeed, although I was already familiar with some of the strategies, I found that playing the game heightened my awareness of manipulative posts the next time I logged into Facebook and Twitter.

It’s a lighthearted approach to a deadly serious problem as the manipulated digital world increasingly intrudes on real life. “We’re not just fighting an epidemic; we’re fighting an infodemic,” said Tedros Adhanom Ghebreyesus, director-general of the World Health Organization, in a prescient speech before the Munich Security Conference as the novel coronavirus SARS-CoV-2 was racing around the globe last February. “Fake news spreads faster and more easily than this virus and is just as dangerous.”

Academics and journalists alike have documented how people who get news from social media and instant messaging apps have been much more likely to believe false claims about Covid-19 and the vaccines developed to combat it. Likewise, researchers have described how social media’s amplification of lies about a stolen election helped incite the Jan. 6 insurrection at the U.S. Capitol.

And while most of us can likely spot obviously fake news or a meme meant to ping our emotions, many people don’t realize how intensely manipulated the digital world actually is. “Computational propaganda — the use of algorithms, automation, and big data to shape public life — is becoming a pervasive and ubiquitous part of everyday life,” write Samantha Bradshaw, now a fellow at the Stanford Internet Observatory, and Philip Howard, now a professor of internet studies at the University of Oxford, in a 2019 report detailing organized social media manipulation. The researchers found evidence of political parties and government agencies in 70 countries using some of the same tactics I used against the citizens of Harmony Square — amassing bot followers, creating fake news, and amplifying disinformation on social media.

One strategy to combat misinformation is to apply techniques such as inoculation to help people become more resilient digital citizens, according to an analysis published in the journal Psychological Science in the Public Interest last December. “The idea is to empower people to make their own decisions — better decisions — by giving them simple tools or heuristics, simple rules that can help them,” said lead author Anastasia Kozyreva, a research scientist at the Max Planck Institute for Human Development in Berlin.

“Human psychology is full of vulnerabilities, but also full of good things,” said Kozyreva. The psychological sciences can be helpful in designing environments that preserve autonomy while providing cues that help rather than manipulate people.

Historically, people have struggled to adapt to new technologies, even to bicycles in the 19th century, said Irene Pasquetto, an assistant professor at the University of Michigan School of Information. “There was this backlash against bicycles and how dangerous they were and how they could disrupt the local economy,” she said. Far faster than walking and cheaper than a horse and buggy, cycling became such a popular activity that restaurants and theaters claimed it was costing them business. Doctors, church leaders, and others thought bicycles morally and physically dangerous for women, who donned bloomers and enjoyed a newfound mobility. Of course, Pasquetto points out, society eventually adapted to bicycles, as it would to the radio, telephones, cars, and so on.

But the digital environment is unique in how rapidly it has consumed our lives, said Kozyreva. The World Wide Web is only 32 years old; the rise of social media just happened in the last two decades. “I don’t think that we have ever seen such a drastic — and not even gradual — change as in the case of the internet,” she said. More than half the world is now online, she points out, and people increasingly base choices on the information they get in the digital realm.

What most people don’t realize is that those choices are intensely manipulated through technologies that filter and mediate information and steer their online behavior, said Filippo Menczer, a professor of informatics and computer science at Indiana University. In the span of a decade, we’ve gone from a model where most people accessed information through trusted intermediaries, such as newspapers or the evening news, to now getting it through social media, he said. But that world is structured so that inaccurate information can become popular very easily and then ranking algorithms boost it even further. And platforms are constantly changing the rules and tweaking their secret algorithms, said Menczer. “It is completely impossible even for researchers — let alone any single user — to understand how and why they are being exposed to one particular piece of information and not another.” All of this, he added, “is changing at rates that are orders of magnitude faster than our brain could possibly adjust.”

To tip the balance of power back towards the individual, Kozyreva and colleagues suggested using cognitive and behavioral interventions such as inoculation to boost people’s control over their digital environment. Menczer is also a fan of this approach. Under his direction, the Observatory on Social Media at Indiana University has developed several tools and games to pull back the curtain on how misinformation spreads on social media.

These tools include Hoaxy, a search engine that visualizes how claims spread on Twitter, and Botometer, which estimates how much social media activity is likely due to the automated computer programs called bots. In a study published in Science in 2018, researchers at the Massachusetts Institute of Technology used the software behind Botometer to help track the spread of 126,000 stories, each verified independently as either true or false, on Twitter. They discovered that lies travel farther and faster than truth in all categories of information and that the effects were most pronounced for political news. Similarly, the Pew Research Center used the Indiana University tool to estimate that bots post nearly two-thirds of the links to popular websites shared on Twitter.

Kozyreva and her team also advocate applying a concept from behavioral economics known as “nudging” (designing environments to steer people towards better choices) to create a healthier digital space. They detail a long list of actions: turning off notifications, moving distracting apps off the home screen (or deleting them), tightening up privacy settings, using programs such as Freedom or Boomerang to selectively control apps, and just staying the heck away from sites such as Facebook where misinformation flourishes.

Michael Caulfield, director of blended and networked learning at Washington State University Vancouver, said that he often hears that people who are easily duped by fake news lack critical thinking skills. That’s a misperception, he argues. It used to be that information largely came wrapped in a news story that provided context. But today these bits of information, data, and statistics come unbundled from the facts and circumstances surrounding them, so that even when people try to do their research, it’s hard to piece it together accurately, said Caulfield. “The information is not really decipherable and they’ve kind of been sold a false bill of goods that they are going to be able to crack the code.”

Caulfield, Kozyreva, and other experts recommend boosting people’s ability to evaluate the reliability of information by teaching them simplified versions of strategies used by fact-checkers. Caulfield says he has had great success teaching undergraduate students to quickly evaluate a source in four moves. “As soon as they have context, they are actually pretty good at saying, ‘Oh, I trust it at this level for these reasons,’” he said.

All of these techniques are grounded in the idea that people want to be in on the game of internet manipulation and, in some cases, to challenge their own beliefs. “Humans have an almost innate ability to despise manipulation and lies,” Kozyreva said.

But Pasquetto, who helped found the Harvard Kennedy School Misinformation Review when she was a postdoctoral fellow there, isn’t so sure. Kozyreva and colleagues “seem to assume that people who are disinformed are aware of it and want not to be disinformed going forward,” said Pasquetto. “I don’t think that’s necessarily the case.”

No one I talked to thinks that behavioral and cognitive interventions alone will protect people from manipulative forces on the internet. “It’s important not to say that we have found a silver bullet, or that now that we have cognitive tools that will teach people digital literacy everything will be fine,” said Kozyreva. “No, nothing will be fine because, obviously, the forces in play are so big and these interventions usually have quite small effects.”

Caulfield is concerned that his efforts will be co-opted by forces that use educational progress as a reason not to deal with issues at a policy level. Just because you have driver’s education doesn’t mean that you abolish driving laws, he pointed out. “All things considered, it’s probably the laws that keep you safer than driver’s ed.”

Ultimately, Pasquetto said she thinks humans will adapt to the internet as they have with other technological innovations. “I see civil society fighting really hard to regulate the internet and rethink how it is designed,” she said. “And then I see media literacy scholars working on designing new curriculum to explain to kids how to deal with online media. I see so much interest in society into fixing the problem.” (The Dutch and U.K. teams that worked on Breaking Harmony Square, for example, also developed a similar game called Bad News, along with a junior version for children aged 8 to 11.)

We can’t simply throw up our hands, said Caulfield. “Cynicism is probably more destructive to democracy than individual lies.” In fact, he said, the goal of many disinformation campaigns is to create an impenetrable fog of competing facts so that people give up and think: “Who’s to say what’s true or false?”

“If you remove truth from the equation, you start to live in a world where authoritarianism is really the only path forward,” he said. “If you lose truth, you end up with power.”

We humans crave novelty and fun, so maybe our quest for truth can start with a game. In Breaking Harmony Square, the better you are at using manipulative tactics online, the more likes and followers you earn. My final score was 58,868 followers, but I bet I could beat that if I played another round. Can you?

Teresa Carr is a Texas-based investigative journalist and the author of Undark’s Matters of Fact column. This article was originally published on Undark.