The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

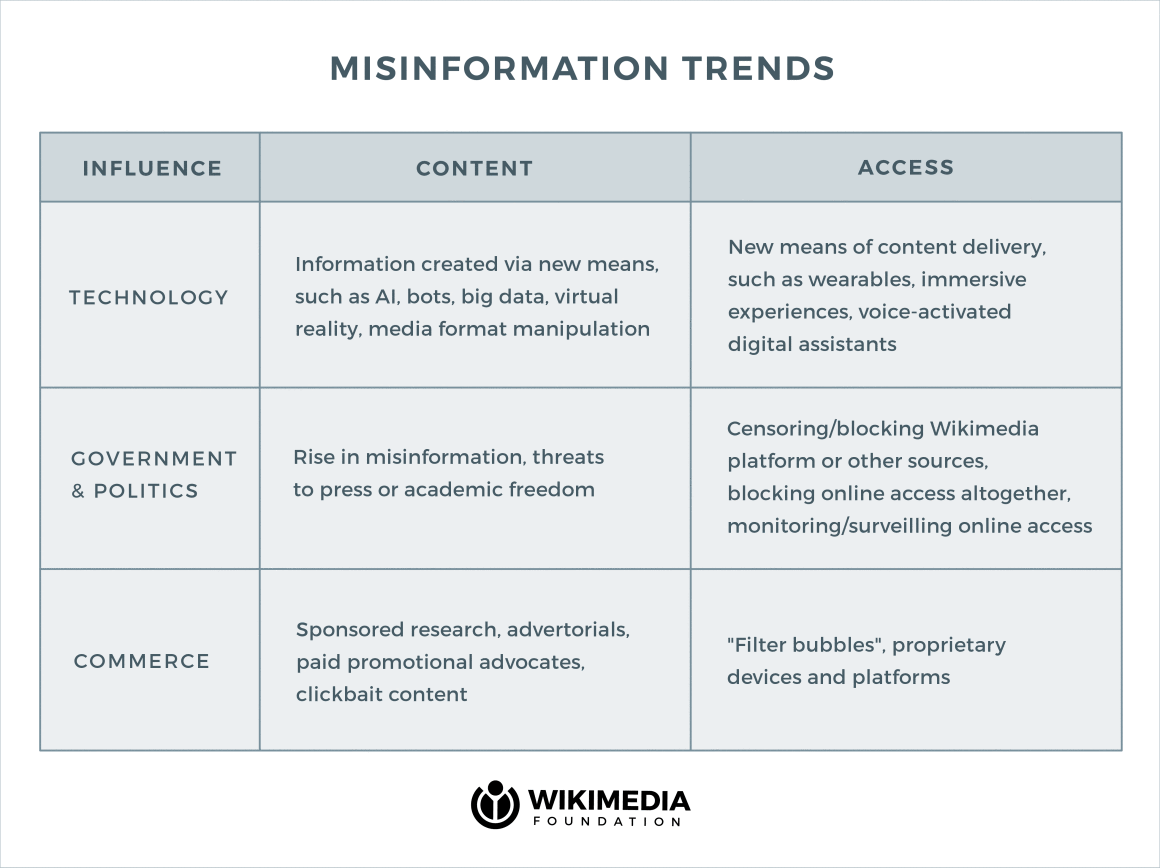

What will misinformation look like in 2030? A team of researchers worked with the Wikimedia Foundation to consider the future of fake news and propaganda. Here’s the framework they came up with:

The full report is here and includes some ideas for how Wikipedia can fight “misinformation and censorship in the decades to come”:

— Encourage and embrace experiments in artificial intelligence and machine learning that could help enrich Wikipedia content.

— Track developments in journalism and academia for new ways to fact-check and verify information that may be used as sources for Wikimedia platforms, such as evaluating video or other new media, also valuable for content.

— Collaborate with other public interest organizations to advocate for press freedom, free speech, universal internet access, and other policy goals that ensure access and the free flow of information.

— Continue to monitor carefully access to Wikimedia platforms from around the globe, deploying technical changes where appropriate.

— Monitor the solutions being developed by commercial platforms and publications, both to see how they might be applied to improving content verification methods on Wikimedia platforms and to see how they might offer opportunities for increasing access to that content.

this, by @drawandstrike on pro-trump media and fake news is fascinating and something i've been thinking about a lot https://t.co/eTQuPBvbpi

— Charlie Warzel (@cwarzel) July 17, 2017

“Audio from an Obama impersonator to achieve near-perfect results.” Researchers at the University of Washington figured out how to use AI techniques to create fake news video of former President Barack Obama “saying things he’s said before, but in a totally new context,” reports Karen Hao for Quartz:

Using a neural network trained on 17 hours of footage of the former US president’s weekly addresses, they were able to generate mouth shapes from arbitrary audio clips of Obama’s voice. The shapes were then textured to photorealistic quality and overlaid onto Obama’s face in a different “target” video. Finally, the researchers retimed the target video to move Obama’s body naturally to the rhythm of the new audio track.

It’s a labor-intensive process that requires a lot of video footage — and, for now, a subject looking directly at a camera. But “though the researchers used only real audio for the study, they were able to skip and reorder Obama’s sentences seamlessly and even use audio from an Obama impersonator to achieve near-perfect results.” The original project is here.

Of course, you can make fake video in other ways too.

93,000 people currently watching this fake Facebook Live of a heavily edited GIF https://t.co/58KHiu6NGP pic.twitter.com/iuJiJDcx2m

— Matt Navarra ⭐️ (@MattNavarra) July 20, 2017

And our 2017 brains, at least, are bad at spotting it. Several studies have come out in the past couple of weeks about people’s inability to detect various kinds of fake news. One, written up by my colleague Shan, found that people aren’t good at either recognizing that images are fake or identifying where photos have been changed (even in photos showing implausible scenes). Think you’d be better at it? Take this Washington Post quiz based on the study and see how you do. I and others at Nieman Lab did quite badly, but some of us noted that the images weren’t as “zany” as you might hope in a quiz like this: one of the manipulations was adding a water pipe to a wall in the background of a picture, which, come on. As Ricardo put it: “Why does it matter that a boat was edited in? Show actual news-based photos.” Maybe we’re just defensive! But I mean:

Dominik Stecula, a researcher at the University of British Columbia, wrote for The Washington Post’s Monkey Cage blog about an experiment in which he showed 700 political science students “images of banners of actual news websites” as well as “logos from three fake news sites.” Participants saw only the logos, not any content, and were then asked to rate the sites on a scale of 0 to 100, with 100 being most “legitimate.”

It seems a little unreasonable to expect people to identify fake news sites based on a site’s name alone; I also don’t know how preexisting perceptions of FoxNews.com and Breitbart fit in here. Also, what does “legitimate” really mean, etc.?

A small change on Facebook could help stop fake news from spreading. Non-publisher Facebook pages will no longer be able to overwrite the default information in link previews (headline, description, image), the company announced. (Facebook had explained last month: “By removing the ability to customize link metadata [i.e. headline, description, image] from all link sharing entry points on Facebook, we are eliminating a channel that has been abused to post false news.”) At TechCrunch, Josh Constine pointed out, “The change could allow Facebook to dismantle some of the infrastructure that allows the mass distribution of fake news without having to make judgment calls about each piece of content individually.”

“Media platforms should be considered media companies.” In a paper for the Wilfried Martens Centre for European Studies, the think tank of the center-right European People’s Party, the Polish social scientist Konrad Niklewicz proposes “a novel way of fighting fake news”: state-imposed legislation governing the measures that social media companies must take to combat fake news on their platforms. Social media sites should be regarded as news publishers, Niklewicz argues, and existing European press laws should be applied to them:

If press laws were to be applied, social media platforms, like the traditional press, would have to correct (or take down) false information at the request of the genuinely affected party. Should the platform decide to ignore the request (which it would be entitled to do), the affected party would have the right to refer the case to an independent court, exactly as is the case with newspapers in most EU countries. The same right of referral to the courts should be guaranteed to the authors of the removed content, allowing them to try to prove that there is nothing wrong with the contested item.

The procedure of correction should be similar (in its results) to the one applied to print publications. Any party notifying the platform should receive an obligatory confirmation of receipt. Once notified, the company (platform) would have to choose one of the following alternative courses of action: (1) do nothing and face the possible eventuality of being referred to the court; (2) fact-check the item concerned and then decide whether it should stay, be corrected or be deleted; (3) delete the item outright, based on an in-house assessment (for example, by the in-house fact-checking team); or (4) ask the author of the content, if identifiable, to correct the information, under threat of deletion if it is not corrected.

In this framework, the contested item could be corrected either by the author(s) or by the social media platform itself. It is important to retain this option. As already explained, many fake news stories are created intentionally, by agents that cannot be coerced into deleting them (because they are the functionaries of a foreign country’s secret service apparatus, for example). In such a case, the platform must take responsibility and intervene without the authors’ consent. Depending on the platforms’ technological capacities, an additional element to the procedure might be considered as well: an obligation to distribute the corrected content to exactly the same audience as that which read the fake story in the first place. Such an obligation would mimic the obligations imposed in some countries on the printed press, which require it to publish the correction in the same medium, in the same place as the false information it has corrected.

File this one under “it’s amazing that this is a center-right position in Europe and it’ll never ever happen in the U.S. but still interesting to read since Facebook fact-checking initiatives are so boring to read about by now.” Plus, actions that Facebook is forced to take in Europe may eventually affect the way the company does business in the U.S.