There’s no end to the need for fact-checking, but fact-checking teams are usually small and struggle to keep up with the demand. In recent months, organizations like WikiTribune have suggested crowdsourcing as an attractive, low-cost way that fact-checking could scale.

As the head of automated fact-checking at the U.K.’s independent fact-checking organization Full Fact, I’ve had a lot of time to think about these suggestions, and I don’t believe that crowdsourcing can solve the fact-checking bottleneck. It might even make it worse. But as two notable attempts, TruthSquad and FactCheckEU, have shown, even if crowdsourcing can’t help scale the core business of fact checking, it could help streamline activities that take place around it.

Think of crowdsourced fact-checking as including three components: speed (how quickly the task can be done), complexity (how difficult the task is to perform; how much oversight it needs), and coverage (the number of topics or areas that can be covered). You can optimize for (at most) two of these at a time; the third has to be sacrificed.

High-profile examples of crowdsourcing like Wikipedia, Quora, and Stack Overflow harness and gather collective knowledge, and have proven that large crowds can be used in meaningful ways for complex tasks across many topics. But the tradeoff is speed.

Projects like Gender Balance (which asks users to identify the gender of politicians) and Democracy Club Candidates (which crowdsources information about election candidates) have shown that small crowds can have a big effect when it comes to simple tasks, done quickly. But the tradeoff is broad coverage.

At Full Fact, during the 2015 U.K. general election, we had 120 volunteers aid our media monitoring operation. They looked through the entire media output every day and extracted the claims being made. The tradeoff here was that the task wasn’t very complex (it didn’t need oversight, and we only had to do a few spot checks).

But we do have two examples of projects that have operated at high levels of complexity, within short timeframes, and across broad areas: TruthSquad and FactCheckEU.

TruthSquad, a pilot project to crowdsource fact checking run in partnership with Factcheck.org, received funding from the Omidyar Network in 2010. The project’s primary goal was to promote news literacy and public engagement, rather than to devise a scalable approach to fact-checking. Readers were asked to rate claims and then, with the aid of a moderating journalist, fact checks were produced. Project lead Fabrice Florin concluded, in part:

“Despite high levels of participation, we didn’t get as many useful links and reviews from our community as we had hoped. Our editorial team did much of the hard work to research factual evidence. (Two-thirds of story reviews and most links were posted by our staff.) Each quote represented up to two days of work from our editors, from start to finish. So this project turned out to be more labor-intensive than we thought.”

The project was successful in engaging readers to take part in the fact-checking process, but it didn’t prove to be a model for producing high-quality fact checks at scale.

FactCheckEU, meanwhile, was run as a fact-checking endeavor across Europe by Alexios Mantzarlis, co-founder of Italian fact-checking site Pagella Politica, who now leads Poynter’s International Fact-Checking Network.

“There was this inherent conflict between wanting to keep the quality of a fact check high, and wanting to get a lot of people involved,” Mantzarlis told me. “We probably erred on the side of keeping the quality of the content high, and so we would review everything that hadn’t been submitted by a higher-level fact-checker.”

Fact checking includes a few different stages. Looking at these stages can help us figure out why crowdsourced fact-checking hasn’t proven successful.

Monitoring and spotting. This involves looking through newspapers, TV, social media, and so on to find claims that can be checked and are worthy of being checked. The decision of whether or not to check a specific claim is often made by an editorial team.

If claims are submitted and decided upon by the crowd, you have to ensure the selection of claims is spread fairly across the political spectrum and is sourced from a variety of outlets. That leads to a few concerns:

— Will opposition parties always have a vested interest in upvoting claims from those in power? Will this skew the total set of fact checks produced?

— Will well-resourced campaigns upvote the claims that best serve their purposes?

— The kinds of people who volunteer to help fact check are a naturally self-selecting group.

Also, Mantzarlis said, “interesting topics would get snatched up relatively fast.” What does that mean for the more mundane but still important claims?

Checking and publishing. This stage encompasses the research and writeup of the fact check. Primary sources and expert opinions are consulted to understand the claims, available data, and their context. Conclusions are synthesized, and content is produced to explain the situation clearly and fairly. TruthSquad and FactCheckEU both discovered that this part of the process required more oversight than they’d expected.

“We were, at the peak, a team of three,” Mantzarlis said. “We foolishly thought that harnessing the crowd was going to require fewer human resources, when in fact it required, at least at the micro level, more.” The editing process, which ensures a level of quality, was, in this case, a key demotivator.

“Where we really lost time, often multiple days, was in the back and forth,” Mantzarlis said. “It either took more time to do that than to write it up ourselves, or they lost interest.”

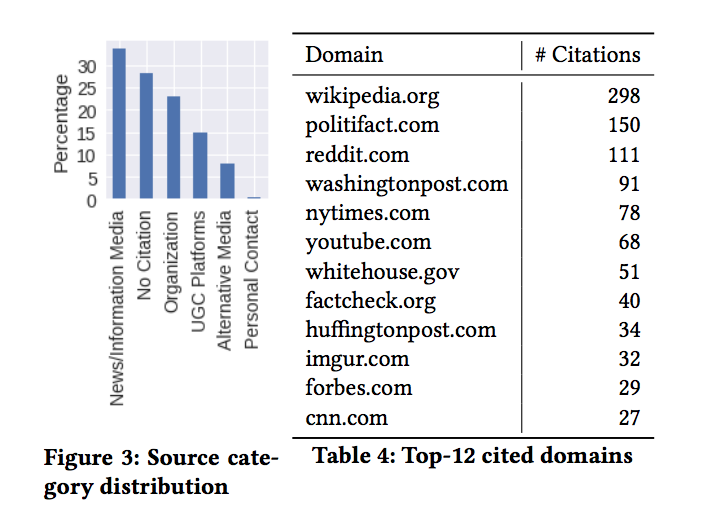

In addition, at Full Fact and many other fact-checking organizations, fact checking starts from primary sources — but this may not be the crowd’s inclination. A recent analysis of Reddit’s /r/politicalfactchecking subreddit argued that the activity on that channel is a good example of crowdsourced fact-checking. But most of the citations in the fact checks produced by Redditors came from secondary sources like Wikipedia, not primary sources. That’s important because it means efforts like this don’t actually scale fact checking — they just re-post something that’s been checked already.

The other bits. After research and content production, there are various activities that take place around the fact check. These include asking for corrections to the record, doing promotion on social media, turning fact checks into different formats like video, or translating them into different languages. “Most of the success we got harnessing the crowd was actually in the translations,” Mantzarlis said. “People were very happy to translate fact checks into other languages.”

Mevan Babakar is the digital product manager at Full Fact. This post is adapted from a longer post about crowdsourced fact checking that originally ran on Full Fact’s blog.