The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

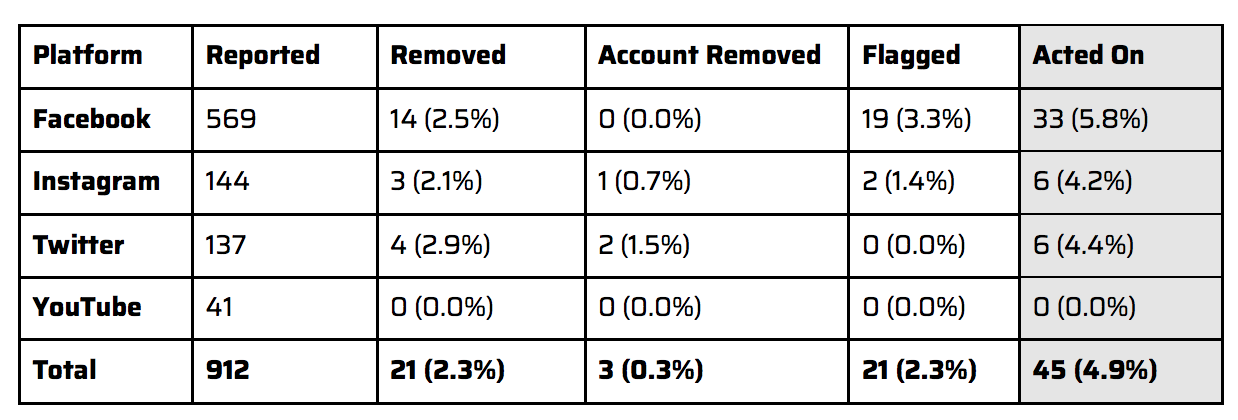

“Even when companies are handed misinformation on a silver platter, they fail to act.” The nonprofit Center for Countering Digital Hate and the global agency Restless Development trained young volunteers to identify, record, and report vaccine misinformation on Facebook, Instagram, YouTube, and Twitter. The platforms’ responses were not impressive: “Of the 912 posts flagged and reported by volunteer [between July 21 and August 26], fewer than 1 in 20 posts containing misinformation were dealt with (4.9%).”

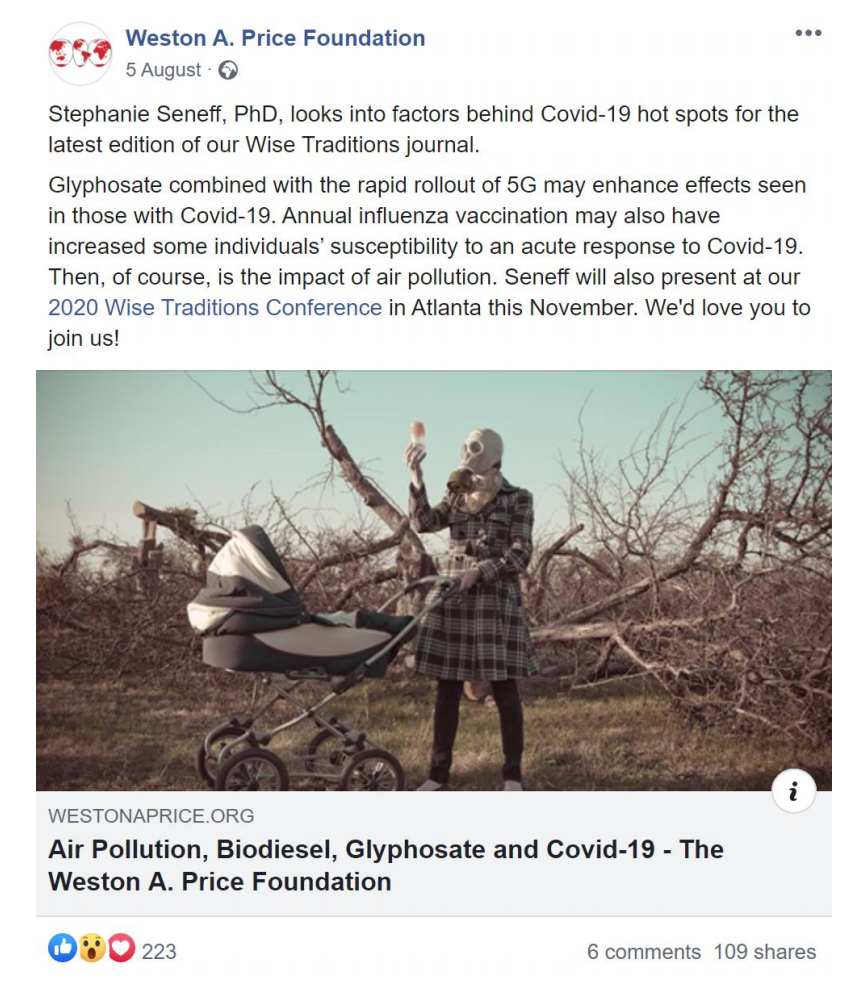

Many of the posts were about a coronavirus vaccine. More than a tenth of the posts in the sample referred to Bill Gates in some way. Others suggested that 5G and the annual flu vaccine worsen Covid-19 symptoms, that a coronavirus vaccine will change people’s DNA, and that the government is trying to kill people of color with the vaccine. Here’s one example, and there are more in the report:

In June, the Center for Countering Digital Hate performed a similar exercise with Covid-related misinformation on social platforms: identifying it, reporting it, and tracking what happened. At the time, it found that the platforms removed fewer than one in 10 of the posts reported.

> Following publication [of that report], Facebook requested and we supplied a complete list of the misinformation posts our volunteers had collected.
>
> For this report, we revisited those posts to audit whether further action was taken in the last three months […] while some further action was taken, three quarters remains intact.
>
> Despite requesting and receiving a full list of Facebook posts containing misinformation featured in our Will to Act report, the platform still only removed one quarter of the posts we identified as breaching their rules. These include posts claiming that Covid is a “bioweapon,” that it is “caused by vaccines” and various conspiracies about Bill Gates.
>
> Facebook proved to be particularly poor at removing the accounts and groups posting misinformation, with just 0.3 percent banned.[…]
>
> Twitter proved to be most effective in removing accounts, with 12.3% banned from the platform. This follows encouraging signs that Twitter is taking a proactive approach to removing and flagging misinformation about coronavirus on its platform.
>
> Removal rates were notably poorer on Instagram than they were on Facebook, despite both companies sharing the same set of community standards and similar policies on Covid misinformation. This is particularly concerning given that this report shows Instagram remains a strong source of follower growth for anti-vaxxers.

The report comes out amid new promises from Facebook to quash misinformation ahead of the 2020 U.S. presidential election. On Thursday the company said, among other things, that it would “bar any new political ads on its site in the week before Election Day,” “strengthen measures against posts that tried to dissuade people from voting,” “quash any candidates’ attempts at claiming false victories by redirecting users to accurate information on the results,” “place a voting information center — a hub for accurate, up-to-date information on how, when and where to register to vote — at the top of its News Feed through Election Day,” “remove posts that tell people they will catch Covid-19 if they vote,” remove posts that cause “confusion around who is eligible to vote or some part of the voting process,” and “limit the number of people that users [can] forward messages to in its Messenger app to no more than five people, down from more than 150.”

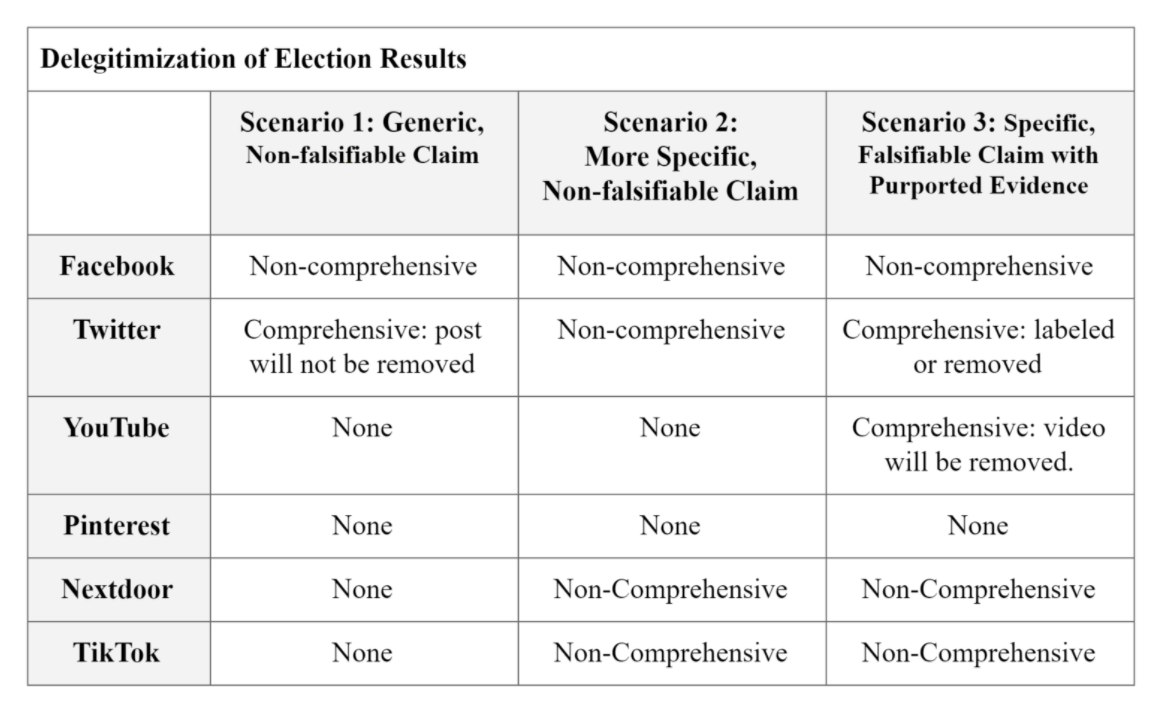

The social platforms’ policies are filled with gray areas that don’t always make it clear which types of election-related misinformation must be taken down. A recent report from the Election Integrity Partnership — a collaboration between the Stanford Internet Observatory and Program on Democracy and the Internet, Graphika, the Atlantic Council’s Digital Forensic Research Lab, and the University of Washington’s Center for an Informed Public — finds that “few platforms [out of 14 studied] have comprehensive policies on election-related content as of August 2020,” and that the category of misinformation that “aims to delegitimize election results on the basis of false claims” is particularly problematic because “none of these platforms have clear, transparent policies on this type of content, which is likely to make enforcement difficult and uneven.”

“Content that uses misrepresentation to disrupt or sow doubt about the larger electoral process or the legitimacy of the election can carry exceptional real-world harm,” the report’s authors conclude. “Combating online disinformation requires action to be taken quickly before content goes viral or reaches a large population of users predisposed to believe that disinformation. Quick and effective action will require the platforms to make decisions against a well-documented framework and for those decisions to be enforced fairly — without adjusting those actions due to concerns about the political consequences.”